Amazon database migration service - first try

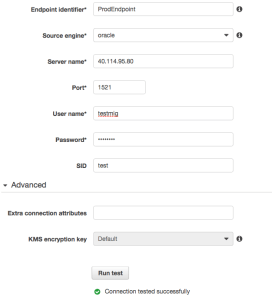

The test for target endpoint failed with the same timeout, but the reason was totally different. It was not DNS, but rather a connection issue. At first, I couldn't figure that out because I was able to connect to my RDS instance from my laptop using server name and port but test endpoint in DMS was not working. Eventually I figured out that the problem was in security groups for endpoint in RDS. By default the AWS RDS instance was created with security group allowing connections outside but somehow restricting connections from DMS. I changed the security group for AWS RDS to "default" and was able to successfully test the endpoint in DMS. The next step was to create a task. I created a task with initial load and ongoing replication for my testmig schema. The task was supposed to drop any tables on the target (you can choose truncate instead if you want) create objects, move data and keep replication until cutover day when you will be able to switch your applications to the new database. It will tell you that you need to setup supplemental logging for replication. Unfortunately it doesn't tell you what kind of supplemental logging you have to setup. So, I enabled minimal data supplemental logging on my Azure test instance. [code lang="sql"] test> alter database add supplemental log data; Database add SUPPLEMENTAL altered. test> exec dbms_capture_adm.prepare_table_instantiation('testmig.test_tab_1','keys') PL/SQL procedure successfully completed. test> [/code] It was not enough and I got the error. By default you are not getting logging for your task but only configuration and statistics about replicated and loaded objects. As a result if you get an error, it is not clear where to look. I enabled supplemental logging for primary key on my replicated table and recreated task checking and logging checkbox. I got error again but I had a log and was able to see what was causing the issue. [code lang="text"] 2016-03-16T19:41:11 [SOURCE_CAPTURE ]I: Oracle compatibility version is 12.1.0.0.0 (oracle_endpoint_conn.c:86) 2016-03-16T19:41:11 [SOURCE_CAPTURE ]I: Oracle capture start time: now (oracle_endpoint_capture.c:701) 2016-03-16T19:41:12 [SOURCE_CAPTURE ]I: New Log Miner boundaries in thread '1' : First REDO Sequence is '4', Last REDO Sequence is '4' (oracdc_reader.c:589) 2016-03-16T19:41:18 [SOURCE_UNLOAD ]W: Supplemental logging is not defined for table with no key 'TESTMIG.TEST_TAB_1' (oracle_endpoint_utils.c:831) 2016-03-16T19:41:18 [SOURCE_UNLOAD ]E: Supplemental logging for table 'TESTMIG.TEST_TAB_1' is not enabled properly [122310] Supplemental logging is not correct (oracle_endpoint_unload.c:245) 2016-03-16T19:41:18 [SOURCE_UNLOAD ]I: Unload finished for table 'TESTMIG'.'TEST_TAB_1' (Id = 1). 0 rows sent. (streamcomponent.c:2567) 2016-03-16T19:41:18 [SOURCE_UNLOAD ]E: Failed to init unloading table 'TESTMIG'.'TEST_TAB_1' [122310] Supplemental logging is not correct (oracle_endpoint_unload.c:441) [/code] It looked like my supplemental logging was not enough. So, I added supplemental logging for all columns and for entire schema testmig. I recreated task and started it again. [code lang="sql"] test> exec dbms_capture_adm.prepare_table_instantiation('testmig.test_tab_1','all'); PL/SQL procedure successfully completed. test> exec dbms_capture_adm.prepare_schema_instantiation('testmig'); PL/SQL procedure successfully completed. test> [/code] It was working fine and was able to perform initial load. [code lang="text"] 2016-03-16T19:49:19 [SOURCE_CAPTURE ]I: Oracle capture start time: now (oracle_endpoint_capture.c:701) 2016-03-16T19:49:20 [SOURCE_CAPTURE ]I: New Log Miner boundaries in thread '1' : First REDO Sequence is '4', Last REDO Sequence is '4' (oracdc_reader.c:589) 2016-03-16T19:49:31 [SOURCE_UNLOAD ]I: Unload finished for table 'TESTMIG'.'TEST_TAB_1' (Id = 1). 100723 rows sent. (streamcomponent.c:2567) 2016-03-16T19:49:31 [TARGET_LOAD ]I: Load finished for table 'TESTMIG'.'TEST_TAB_1' (Id = 1). 100723 rows received. 0 rows skipped. Volume transfered 45929688 (streamcomponent.c:2787) [/code] What about ongoing changes? Yes, it was keeping the replication on and the tables were in sync. Replication lag for my case was minimal but we need to note that it was just one table with a low transaction rate. By the end I switched my load to AWS RDS database, stopped and deleted the DMS task. Migration was completed. I compared data in tables running a couple of simple checks for count and rows and running also one table "minus" other. Everything was fine. [code lang="sql"] rdsorcl> select max(pk_id) from testmig.test_tab_1; MAX(PK_ID) ---------------- 1000843 rdsorcl> select * from testmig.test_tab_1 where pk_id=1000843; PK_ID RND_STR_1 USE_DATE RND_STR_2 ACC_DATE ---------------- --------------- --------------------------- --------------- --------------------------- 1000843 OUHRTHQ8 02/11/13 07:27:44 NFIAODAU 05/07/15 03:49:29 rdsorcl> ---------------- test> select max(pk_id) from testmig.test_tab_1; MAX(PK_ID) ---------------- 1000843 test> select * from testmig.test_tab_1 where pk_id=1000843; PK_ID RND_STR_1 USE_DATE RND_STR_2 ACC_DATE ---------------- --------------- --------------------------- --------------- --------------------------- 1000843 OUHRTHQ8 02/11/13 07:27:44 NFIAODAU 05/07/15 03:49:29 test> test> select count(*) from (select * from test_tab_1 minus select * from test_tab_1@rdsorcl); COUNT(*) ---------------- 0 test> [/code] A summary of DMS:

The test for target endpoint failed with the same timeout, but the reason was totally different. It was not DNS, but rather a connection issue. At first, I couldn't figure that out because I was able to connect to my RDS instance from my laptop using server name and port but test endpoint in DMS was not working. Eventually I figured out that the problem was in security groups for endpoint in RDS. By default the AWS RDS instance was created with security group allowing connections outside but somehow restricting connections from DMS. I changed the security group for AWS RDS to "default" and was able to successfully test the endpoint in DMS. The next step was to create a task. I created a task with initial load and ongoing replication for my testmig schema. The task was supposed to drop any tables on the target (you can choose truncate instead if you want) create objects, move data and keep replication until cutover day when you will be able to switch your applications to the new database. It will tell you that you need to setup supplemental logging for replication. Unfortunately it doesn't tell you what kind of supplemental logging you have to setup. So, I enabled minimal data supplemental logging on my Azure test instance. [code lang="sql"] test> alter database add supplemental log data; Database add SUPPLEMENTAL altered. test> exec dbms_capture_adm.prepare_table_instantiation('testmig.test_tab_1','keys') PL/SQL procedure successfully completed. test> [/code] It was not enough and I got the error. By default you are not getting logging for your task but only configuration and statistics about replicated and loaded objects. As a result if you get an error, it is not clear where to look. I enabled supplemental logging for primary key on my replicated table and recreated task checking and logging checkbox. I got error again but I had a log and was able to see what was causing the issue. [code lang="text"] 2016-03-16T19:41:11 [SOURCE_CAPTURE ]I: Oracle compatibility version is 12.1.0.0.0 (oracle_endpoint_conn.c:86) 2016-03-16T19:41:11 [SOURCE_CAPTURE ]I: Oracle capture start time: now (oracle_endpoint_capture.c:701) 2016-03-16T19:41:12 [SOURCE_CAPTURE ]I: New Log Miner boundaries in thread '1' : First REDO Sequence is '4', Last REDO Sequence is '4' (oracdc_reader.c:589) 2016-03-16T19:41:18 [SOURCE_UNLOAD ]W: Supplemental logging is not defined for table with no key 'TESTMIG.TEST_TAB_1' (oracle_endpoint_utils.c:831) 2016-03-16T19:41:18 [SOURCE_UNLOAD ]E: Supplemental logging for table 'TESTMIG.TEST_TAB_1' is not enabled properly [122310] Supplemental logging is not correct (oracle_endpoint_unload.c:245) 2016-03-16T19:41:18 [SOURCE_UNLOAD ]I: Unload finished for table 'TESTMIG'.'TEST_TAB_1' (Id = 1). 0 rows sent. (streamcomponent.c:2567) 2016-03-16T19:41:18 [SOURCE_UNLOAD ]E: Failed to init unloading table 'TESTMIG'.'TEST_TAB_1' [122310] Supplemental logging is not correct (oracle_endpoint_unload.c:441) [/code] It looked like my supplemental logging was not enough. So, I added supplemental logging for all columns and for entire schema testmig. I recreated task and started it again. [code lang="sql"] test> exec dbms_capture_adm.prepare_table_instantiation('testmig.test_tab_1','all'); PL/SQL procedure successfully completed. test> exec dbms_capture_adm.prepare_schema_instantiation('testmig'); PL/SQL procedure successfully completed. test> [/code] It was working fine and was able to perform initial load. [code lang="text"] 2016-03-16T19:49:19 [SOURCE_CAPTURE ]I: Oracle capture start time: now (oracle_endpoint_capture.c:701) 2016-03-16T19:49:20 [SOURCE_CAPTURE ]I: New Log Miner boundaries in thread '1' : First REDO Sequence is '4', Last REDO Sequence is '4' (oracdc_reader.c:589) 2016-03-16T19:49:31 [SOURCE_UNLOAD ]I: Unload finished for table 'TESTMIG'.'TEST_TAB_1' (Id = 1). 100723 rows sent. (streamcomponent.c:2567) 2016-03-16T19:49:31 [TARGET_LOAD ]I: Load finished for table 'TESTMIG'.'TEST_TAB_1' (Id = 1). 100723 rows received. 0 rows skipped. Volume transfered 45929688 (streamcomponent.c:2787) [/code] What about ongoing changes? Yes, it was keeping the replication on and the tables were in sync. Replication lag for my case was minimal but we need to note that it was just one table with a low transaction rate. By the end I switched my load to AWS RDS database, stopped and deleted the DMS task. Migration was completed. I compared data in tables running a couple of simple checks for count and rows and running also one table "minus" other. Everything was fine. [code lang="sql"] rdsorcl> select max(pk_id) from testmig.test_tab_1; MAX(PK_ID) ---------------- 1000843 rdsorcl> select * from testmig.test_tab_1 where pk_id=1000843; PK_ID RND_STR_1 USE_DATE RND_STR_2 ACC_DATE ---------------- --------------- --------------------------- --------------- --------------------------- 1000843 OUHRTHQ8 02/11/13 07:27:44 NFIAODAU 05/07/15 03:49:29 rdsorcl> ---------------- test> select max(pk_id) from testmig.test_tab_1; MAX(PK_ID) ---------------- 1000843 test> select * from testmig.test_tab_1 where pk_id=1000843; PK_ID RND_STR_1 USE_DATE RND_STR_2 ACC_DATE ---------------- --------------- --------------------------- --------------- --------------------------- 1000843 OUHRTHQ8 02/11/13 07:27:44 NFIAODAU 05/07/15 03:49:29 test> test> select count(*) from (select * from test_tab_1 minus select * from test_tab_1@rdsorcl); COUNT(*) ---------------- 0 test> [/code] A summary of DMS:

- We may need to adjust security groups for target RDS or EC2 systems. It may prevent connections.

- Better to use IP for source endpoints since DNS may be not reliable.

- Enable logging when you create task.

- If you enable replication from Oracle database you have to setup full supplemental logging for the replicated schemas on your source system.

- It requires basic knowledge about replication and how it works to understand and fix the error.

On this page

Share this

Share this

More resources

Learn more about Pythian by reading the following blogs and articles.

Fix: SSIS Catalog Creation Error – “SQL Server Denali is Required to Install Integration Services”

Fix: SSIS Catalog Creation Error – “SQL Server Denali is Required to Install Integration Services”

Mar 24, 2022 12:00:00 AM

1

min read

Fix: SSIS Installation Error – Rule “Existing clustered or clustered-prepared instance” Failed

Fix: SSIS Installation Error – Rule “Existing clustered or clustered-prepared instance” Failed

Mar 29, 2022 12:00:00 AM

1

min read

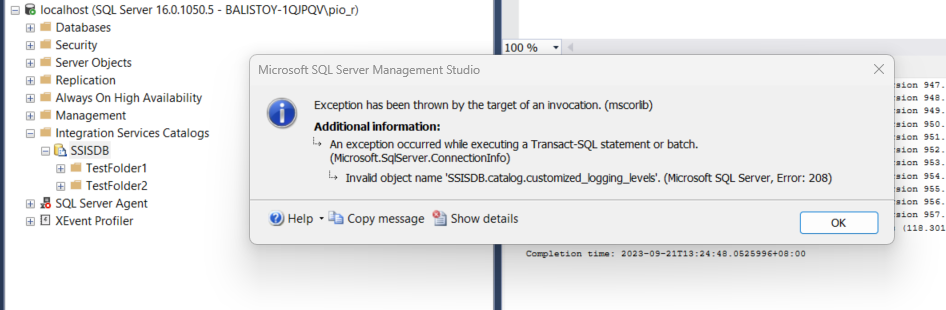

Fix: Invalid Object Name 'SSISDB.catalog.customized_logging_levels'

Fix: Invalid Object Name 'SSISDB.catalog.customized_logging_levels'

Oct 18, 2023 1:56:42 PM

3

min read

Ready to unlock value from your data?

With Pythian, you can accomplish your data transformation goals and more.