How to Secure Your Elastic Stack (Plus Kibana, Logstash and Beats)

Editor’s Note: Because our bloggers have lots of useful tips, every now and then we bring forward a popular post from the past. We originally published today’s post on December 16, 2019.

This is the second in a series of blog posts about the Elastic Stack and the components around it. The final objective is to deploy and secure a production-ready environment using these freely available tools.

In this post, I’ll focus on securing your Elastic Stack (plus Kibana, Logstash and Beats) using HTTPS, SSL and TLS. If you need to install an Elasticsearch cluster first, check out the first post, which covered Installing Elasticsearch Using Ansible.

As I mentioned in the first post, one thing I find disturbing in this day and age is the Elastic Stack’s default behavior: out of the box, all messages exchanged between the components of the stack travel in plain text! Of course, we like to imagine that everything sits on a private, secure network: the nodes of the cluster, the data shipped to them via Beats, and so on. The truth is, that’s not always the case.

We all heard the great news from the vendor, Elastic, a few months ago: starting with versions 6.8.0 and 7.1.0, most of the security features in Elasticsearch are free! Before this, they were part of the paid X-Pack features, so if we needed secure communication between the components of our cluster, we had to pay. Fortunately, that is no longer the case, and we can now both deploy and secure our stack quickly.

The bad news is that vendor documentation about securing it is still scarce. The good news is we have this blog post as a guide! :)

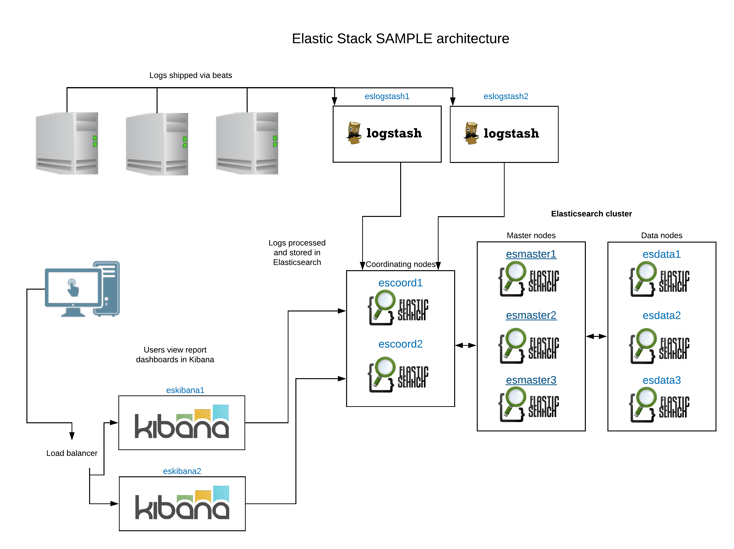

Sample Elastic Stack architecture

I’ll work on this post under the assumption that the architecture matches the diagram above. Of course, this will NOT be the case for your deployment, so please adjust the components as necessary. The diagram is for information purposes only.

Securing Elasticsearch cluster

First, we need to create the CA for the cluster:

/usr/share/elasticsearch/bin/elasticsearch-certutil ca

Then, it’s necessary to create the certificates for the individual components:

/usr/share/elasticsearch/bin/elasticsearch-certutil cert --ca elastic-stack-ca.p12 --dns esmaster1,esmaster2,esmaster3,esdata1,esdata2,esdata3,escoord1,escoord2,eslogstash1,eslogstash2

You can create both files on any one of the servers and distribute them afterward. By default, they are written under /usr/share/elasticsearch/ as elastic-stack-ca.p12 (the CA) and elastic-certificates.p12 (the certificates).

I recommend choosing the expiry date of the certificates deliberately. Three years is a safe value. After that, it’s just a matter of remembering when they will expire and renewing them beforehand.

The following options must be added to the configuration of every node in the cluster:
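To make the "remember when they expire" part concrete, here is a minimal sketch that computes the expiry date and a renewal reminder date from the day the certificates were issued. The three-year validity comes from the recommendation above (elasticsearch-certutil has a --days option for setting it); the 30-day reminder window and the function name are assumptions made purely for illustration.

```python
from datetime import date, timedelta
from typing import Tuple

# Assumed values for illustration: a three-year (1095-day) validity period
# and a 30-day renewal reminder. Adjust both to your own policy.
VALIDITY_DAYS = 3 * 365
REMINDER_DAYS = 30


def expiry_and_reminder(issued_on: date,
                        validity_days: int = VALIDITY_DAYS,
                        reminder_days: int = REMINDER_DAYS) -> Tuple[date, date]:
    """Return (expiry date, date to start the renewal) for a certificate."""
    expires_on = issued_on + timedelta(days=validity_days)
    remind_on = expires_on - timedelta(days=reminder_days)
    return expires_on, remind_on


if __name__ == "__main__":
    expires_on, remind_on = expiry_and_reminder(date.today())
    print(f"Certificates expire on {expires_on}; start renewing on {remind_on}")
```

Putting the reminder date into a calendar or a monitoring check on the day you generate the certificates is usually enough to avoid a surprise outage.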

xpack.security.enabled: true
xpack.security.transport.ssl.enabled: true
xpack.security.transport.ssl.verification_mode: certificate
xpack.security.transport.ssl.keystore.path: security/elastic-certificates.p12
xpack.security.transport.ssl.truststore.path: security/elastic-certificates.p12
xpack.security.http.ssl.enabled: true
xpack.security.http.ssl.keystore.path: security/elastic-certificates.p12
xpack.security.http.ssl.truststore.path: security/elastic-certificates.p12
xpack.security.http.ssl.verification_mode: certificate
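Since the same options have to be present on every node, a small check can catch a node that was missed. The sketch below is only an illustration: it parses flat "key: value" lines (not full YAML) and reports which of the options listed above are absent or disabled. The function names are hypothetical and not part of any Elastic tooling.

```python
# Options from the list above that must be set to "true".
REQUIRED_TRUE = (
    "xpack.security.enabled",
    "xpack.security.transport.ssl.enabled",
    "xpack.security.http.ssl.enabled",
)
# Options from the list above that must simply have a value.
REQUIRED_SET = (
    "xpack.security.transport.ssl.verification_mode",
    "xpack.security.transport.ssl.keystore.path",
    "xpack.security.transport.ssl.truststore.path",
    "xpack.security.http.ssl.keystore.path",
    "xpack.security.http.ssl.truststore.path",
    "xpack.security.http.ssl.verification_mode",
)


def parse_flat_settings(text: str) -> dict:
    """Parse flat 'key: value' lines, skipping blank lines and comments.

    This is not a YAML parser; it only handles the dotted, single-line
    style of settings shown in this post.
    """
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            settings[key.strip()] = value.strip().strip('"')
    return settings


def missing_security_settings(settings: dict) -> list:
    """Return the required options that are absent, empty or not enabled."""
    problems = [k for k in REQUIRED_TRUE
                if settings.get(k, "").lower() != "true"]
    problems += [k for k in REQUIRED_SET if not settings.get(k)]
    return problems
```

A possible use is to read /etc/elasticsearch/elasticsearch.yml on each node, pass its text through parse_flat_settings, and fail the deployment if missing_security_settings returns anything.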

Remember how in my first post I recommended using Ansible to deploy the Elasticsearch cluster? Now is the time to use it to easily redeploy with the security options.

The updated Ansible configuration file is this:

- hosts: masters
  roles:
    - role: elastic.elasticsearch
  vars:
    es_heap_size: "8g"
    es_config:
      cluster.name: "esprd"
      network.host: 0
      cluster.initial_master_nodes: "esmaster1,esmaster2,esmaster3"
      discovery.seed_hosts: "esmaster1:9300,esmaster2:9300,esmaster3:9300"
      http.port: 9200
      node.data: false
      node.master: true
      node.ingest: false
      node.ml: false
      cluster.remote.connect: false
      bootstrap.memory_lock: true
      xpack.security.enabled: true
      xpack.security.transport.ssl.enabled: true
      xpack.security.transport.ssl.verification_mode: certificate
      xpack.security.transport.ssl.keystore.path: security/elastic-certificates.p12
      xpack.security.transport.ssl.truststore.path: security/elastic-certificates.p12
      xpack.security.http.ssl.enabled: true
      xpack.security.http.ssl.keystore.path: security/elastic-certificates.p12
      xpack.security.http.ssl.truststore.path: security/elastic-certificates.p12
      xpack.security.http.ssl.verification_mode: certificate

- hosts: data
  roles:
    - role: elastic.elasticsearch
  vars:
    es_data_dirs:
      - "/var/lib/elasticsearch"
    es_heap_size: "30g"
    es_config:
      cluster.name: "esprd"
      network.host: 0
      discovery.seed_hosts: "esmaster1:9300,esmaster2:9300,esmaster3:9300"
      http.port: 9200
      node.data: true
      node.master: false
      node.ml: false
      bootstrap.memory_lock: true
      indices.recovery.max_bytes_per_sec: 100mb
      xpack.security.enabled: true
      xpack.security.transport.ssl.enabled: true
      xpack.security.transport.ssl.verification_mode: certificate
      xpack.security.transport.ssl.keystore.path: security/elastic-certificates.p12
      xpack.security.transport.ssl.truststore.path: security/elastic-certificates.p12
      xpack.security.http.ssl.enabled: true
      xpack.security.http.ssl.keystore.path: security/elastic-certificates.p12
      xpack.security.http.ssl.truststore.path: security/elastic-certificates.p12
      xpack.security.http.ssl.verification_mode: certificate

- hosts: coordinating
  roles:
    - role: elastic.elasticsearch
  vars:
    es_heap_size: "16g"
    es_config:
      cluster.name: "esprd"
      network.host: 0
      discovery.seed_hosts: "esmaster1:9300,esmaster2:9300,esmaster3:9300"
      http.port: 9200
      node.data: false
      node.master: false
      node.ingest: false
      node.ml: false
      cluster.remote.connect: false
      bootstrap.memory_lock: true
      xpack.security.enabled: true
      xpack.security.transport.ssl.enabled: true
      xpack.security.transport.ssl.verification_mode: certificate
      xpack.security.transport.ssl.keystore.path: security/elastic-certificates.p12
      xpack.security.transport.ssl.truststore.path: security/elastic-certificates.p12
      xpack.security.http.ssl.enabled: true
      xpack.security.http.ssl.keystore.path: security/elastic-certificates.p12
      xpack.security.http.ssl.truststore.path: security/elastic-certificates.p12
      xpack.security.http.ssl.verification_mode: certificate

If you didn’t deploy via Ansible, you can still add the options manually to the configuration file.

After adding the options and restarting the cluster, Elasticsearch will be accessible via https. You can check with https://esmaster1:9200/_cluster/health.

We need to create the default users and set up passwords for security on Elasticsearch. Again, this can be done on any of the Elasticsearch nodes.

/usr/share/elasticsearch/bin/elasticsearch-setup-passwords interactive

The list of users will be similar to this one:

elastic: REDACTED
apm-system: REDACTED
kibana: REDACTED
logstash_system: REDACTED
beats_system: REDACTED
remote_monitoring_user: REDACTED
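With the passwords set, the health endpoint mentioned earlier can also be queried from a script instead of a browser. The sketch below is one possible way to do it with the Python standard library. It assumes you have a PEM copy of the CA certificate (the Logstash section of this post extracts one as logstash-ca.crt); the function names and the example path are hypothetical.

```python
import base64
import json
import ssl
import urllib.request


def build_health_request(host: str, user: str, password: str) -> urllib.request.Request:
    """Build an authenticated request for the cluster health endpoint."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"https://{host}:9200/_cluster/health",
        headers={"Authorization": f"Basic {token}"},
    )


def cluster_status(host: str, user: str, password: str, ca_file: str) -> str:
    """Return the cluster status ("green", "yellow" or "red").

    ca_file is assumed to be a PEM file containing the cluster CA, so the
    node certificate is verified rather than blindly trusted.
    """
    context = ssl.create_default_context(cafile=ca_file)
    request = build_health_request(host, user, password)
    with urllib.request.urlopen(request, context=context, timeout=10) as resp:
        return json.load(resp)["status"]


# Example call (needs a reachable cluster, so it is left commented out):
# print(cluster_status("esmaster1", "elastic", "REDACTED", "/etc/logstash/logstash-ca.crt"))
```

Verifying against the CA, rather than disabling verification, also confirms that the --dns names used when creating the certificates match the host names you connect to.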

Securing Kibana

After all security options are set on the Elasticsearch cluster, we move on to the Kibana configuration. We will create a PEM format certificate and key for each Kibana node with the following commands:

/usr/share/elasticsearch/bin/elasticsearch-certutil cert --pem --ca elastic-stack-ca.p12 --dns eskibana1
/usr/share/elasticsearch/bin/elasticsearch-certutil cert --pem --ca elastic-stack-ca.p12 --dns eskibana2

Once done, we need to copy each certificate and key to its corresponding Kibana node, under /etc/kibana/.

The configuration file for Kibana needs to end up being similar to this one. We’ll focus only on the basic and security-related parts of it.

server.port: 5601
server.host: "0.0.0.0"
server.name: "eskibana1"
elasticsearch.hosts: ["https://esmaster1:9200"]
elasticsearch.username: "kibana"
elasticsearch.password: "REDACTED"
server.ssl.enabled: true
server.ssl.certificate: /etc/kibana/instance.crt
server.ssl.key: /etc/kibana/instance.key
elasticsearch.ssl.verificationMode: none
xpack.security.encryptionKey: "REDACTED"

After restarting Kibana, you can now access it via https. For example, at https://eskibana1:5601/app/kibana/

Securing Logstash

Logstash security configuration requires the certificate to be in PEM format (as opposed to the PKCS#12 keystore used for the Elasticsearch nodes above). This is an undocumented “feature” (requirement)!

We’ll convert the general PKCS#12 certificate into PEM for the Logstash certificates:

openssl pkcs12 -in elastic-certificates.p12 -out /etc/logstash/logstash.pem -clcerts -nokeys

- Obtain the key:

openssl pkcs12 -in elastic-certificates.p12 -nocerts -nodes | sed -ne '/-BEGIN PRIVATE KEY-/,/-END PRIVATE KEY-/p' > logstash-ca.key

- Obtain the CA:

openssl pkcs12 -in elastic-certificates.p12 -cacerts -nokeys -chain | sed -ne '/-BEGIN CERTIFICATE-/,/-END CERTIFICATE-/p' > logstash-ca.crt

- Obtain the node certificate:

openssl pkcs12 -in elastic-certificates.p12 -clcerts -nokeys | sed -ne '/-BEGIN CERTIFICATE-/,/-END CERTIFICATE-/p' > logstash.crt

(Rename logstash-ca.crt to es-ca.crt if and when required, or give it any other name you prefer.)
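The sed filters in the commands above keep only the PEM blocks and drop the "Bag Attributes" text that openssl prints around them. If you want to sanity-check the resulting files, a rough sketch like the following can confirm that each one contains the kind of block you expect. The helper name is hypothetical, and it checks only the PEM framing, not whether the certificate or key itself is valid.

```python
import re

# Matches one PEM block: a BEGIN line, any content, and the matching END line.
PEM_BLOCK = re.compile(
    r"-----BEGIN (?P<label>[A-Z0-9 ]+)-----\r?\n.*?-----END (?P=label)-----",
    re.DOTALL,
)


def pem_blocks(text: str, label: str) -> list:
    """Return every PEM block in text with the given label.

    Typical labels are "CERTIFICATE" and "PRIVATE KEY". Anything outside
    the BEGIN/END markers (such as "Bag Attributes") is ignored.
    """
    return [m.group(0) for m in PEM_BLOCK.finditer(text)
            if m.group("label") == label]
```

For example, reading logstash-ca.key and calling pem_blocks(text, "PRIVATE KEY") should return exactly one block, while logstash.crt should yield at least one "CERTIFICATE" block.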

Then, we need to edit the Logstash output filters to reflect the new security settings:

input {
  beats {
    port => 5044
  }
}

output {
  elasticsearch {
    ssl => true
    ssl_certificate_verification => true
    cacert => '/etc/logstash/logstash.pem'
    hosts => ["esmaster1:9200","esmaster2:9200","esmaster3:9200"]
    index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
    user => "elastic"
    password => "REDACTED"
  }
}

As we can see, Logstash will now talk to Elasticsearch using SSL and the certificate we just converted.

Finally, we edit Logstash’s configuration file /etc/logstash/logstash.yml to be like the following (focus only on security-related parts of it):

path.data: /var/lib/logstash
config.reload.automatic: true
path.logs: /var/log/logstash
xpack.monitoring.enabled: true
xpack.monitoring.elasticsearch.username: logstash_system
xpack.monitoring.elasticsearch.password: REDACTED
xpack.monitoring.elasticsearch.hosts: ["https://esmaster1:9200", "https://esmaster2:9200", "https://esmaster3:9200"]
xpack.monitoring.elasticsearch.ssl.certificate_authority: /etc/logstash/es-ca.crt
xpack.monitoring.elasticsearch.sniffing: true
xpack.monitoring.collection.interval: 10s
xpack.monitoring.collection.pipeline.details.enabled: true

Restart Logstash so that it picks up the new settings.

Taking a break — almost there!

(Phew, we’re almost there! We’re on the way to securing your Elastic Stack. Please just be a bit more patient. It’s going to be worth it for the security. Right? RIGHT?!)

Security on XKCD. Permission to use the image at https://xkcd.com/about/

Securing Beats — changes (still) on Logstash servers

We need to create a new certificate in order for Logstash to accept SSL connections from Beats. This certificate will be used only for communication between these two components of the stack. This certificate is also different than the one used for Logstash to communicate with the Elasticsearch cluster to send data. This is why the CA and the crt/key (in PEM format) are different.

Okay, let’s create the certificates!

/usr/share/elasticsearch/bin/elasticsearch-certutil cert --ca-cert logstash-ca/logstash-ca.crt --ca-key logstash-ca/logstash-ca.key --dns eslogstash1,eslogstash2 --pem
openssl pkcs8 -in logstash.key -topk8 -nocrypt -out logstash.pkcs8.key

If you need to keep both plain-text (but why?!) and secure communication, there is an extra step: create two Logstash configurations, one for plain text and one for SSL. The first, with plain-text input (from Beats) and SSL output (to the Elasticsearch cluster), is the one listed in the section above.

The new (secure) input (from Beats) + output (to Elasticsearch) configuration would be:

input {
  beats {
    port => 5045
    ssl => true
    ssl_certificate_authorities => ["/etc/logstash/ca.crt"]
    ssl_certificate => "/etc/logstash/instance.crt"
    ssl_key => "/etc/logstash/logstash.pkcs8.key"
  }
}

output {
  elasticsearch {
    ssl => true
    ssl_certificate_verification => true
    cacert => '/etc/logstash/logstash.pem'
    hosts => ["esmaster1:9200","esmaster2:9200","esmaster3:9200"]
    index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
    user => "elastic"
    password => "REDACTED"
  }
}

Note that plain-text logs arrive on port 5044/tcp, while SSL logs arrive on port 5045/tcp. Adjust the port numbers if you need to.
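Before touching any Beats configuration, it can help to confirm that the secure port really speaks TLS with a certificate signed by your CA. The following is a minimal sketch using the Python standard library. It assumes the Beats input does not require a client certificate for the handshake and that you pass the path of the CA file used for the Logstash certificates; both function names are hypothetical.

```python
import socket
import ssl
from typing import Optional


def make_client_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a verifying TLS client context.

    If ca_file is given, it is loaded as a trusted CA; otherwise only the
    system trust store is used. Hostname checking stays enabled.
    """
    return ssl.create_default_context(cafile=ca_file)


def probe_tls(host: str, port: int, context: ssl.SSLContext) -> str:
    """Complete a TLS handshake and return the negotiated protocol version.

    Raises ssl.SSLError (or a subclass) if the certificate cannot be
    verified, and OSError if the port is unreachable.
    """
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()


# Example call (needs a running Logstash, so it is left commented out):
# print(probe_tls("eslogstash1", 5045, make_client_context("/etc/logstash/ca.crt")))
```

A successful handshake here tells you that the certificate, key and --dns names on the Logstash side line up, which narrows down later problems to the Beats configuration.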

Securing Beats — changes (finally!) on Beats shipping instances

Once the Logstash configuration is ready, it’s just a matter of setting the certificates on the Beats side. Easy as pie!

This is an example of the Metricbeat configuration. Please focus on the security part of it. These same certificates can be applied to Filebeat and any other beat!

metricbeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: true
  reload.period: 10s

setup.template.settings:
  index.number_of_shards: 1
  index.codec: best_compression

name: my-happy-metricbeat-shipper-name

fields:
  service: myimportantservice

output.logstash:
  hosts: ["eslogstash1:5045"]
  ssl.certificate_authorities: ["/etc/metricbeat/logstash-ca.crt"]
  ssl.certificate: "/etc/metricbeat/logstash.crt"
  ssl.key: "/etc/metricbeat/logstash.pkcs8.key"

Restart Logstash and corresponding beat(s) and that’s it!

Now you have a completely secure Elastic Stack (including Elasticsearch, Kibana, Logstash and Beats). You can be really proud of it because this is not a trivial task!
