Exploring the operations log in MongoDB

What is the MongoDB Replication Window?

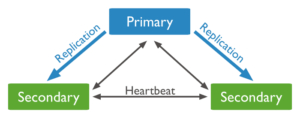

If you come from the MySQL world, you are probably already familiar with the basics of master-slave replication. The master writes replication events to its binary logs. Each slave connected to the master copies those events into its own relay logs using the Slave_IO thread, and then executes the events from the relay logs using the Slave_SQL thread. Replication is asynchronous: a slave can stop replicating at any time and will later continue from the last position it reached. The expire_logs_days variable on the master can be changed dynamically if we want to keep more binary logs and extend the replication window. The slave server does not need binary logs enabled for replication to work.

Similar to binary logs, MongoDB has the operations log, called the oplog, which is used for replication. According to the MongoDB docs, the oplog is a special capped collection that keeps a rolling record of all operations that modify the data stored in your databases. MongoDB applies database operations on the primary and then records the operations in the primary’s oplog. The secondary members then copy and apply these operations in an asynchronous process. All replica set members contain a copy of the oplog, in the local.oplog.rs collection, which allows them to maintain the current state of the database.

This is different from MySQL replication: the oplog collection must exist on all members of the replica set. Additionally, there is no option for adding replication filters: all nodes in the replica set must have the same dataset. Any member can import oplog entries from any other member. This is a great feature for automatic failover, where any member can become Primary (except priority 0 nodes and hidden nodes, which cannot). The replication window is the span of time covered by the entries currently held in the capped oplog collection, so how quickly the oplog fills up with changes determines how long that window is.

The oplog collection is stored in the local database. By default, its initial size is as follows:

- For 64-bit Linux, Solaris, FreeBSD, and Windows systems, MongoDB allocates 5% of the available free disk space, but will always allocate at least 1 gigabyte and never more than 50 gigabytes.

- For 64-bit OS X systems, MongoDB allocates 183 megabytes of space to the oplog.

- For 32-bit systems, MongoDB allocates about 48 megabytes of space to the oplog.
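
The default sizing rule for 64-bit Linux, Solaris, FreeBSD, and Windows can be written down as a small calculation. The sketch below is plain JavaScript, not a MongoDB API: the function name defaultOplogSizeBytes is made up for illustration, and it assumes that a gigabyte means 1024^3 bytes.

```javascript
// Illustrative only: mirrors the documented default rule, not MongoDB's own code.
const GIB = 1024 ** 3; // assumption: 1 gigabyte = 1024^3 bytes

function defaultOplogSizeBytes(freeDiskBytes) {
  const fivePercent = Math.floor(freeDiskBytes / 20); // 5% of the free disk space
  const lowerBound = 1 * GIB;  // never less than 1 gigabyte
  const upperBound = 50 * GIB; // never more than 50 gigabytes
  return Math.min(Math.max(fivePercent, lowerBound), upperBound);
}

// Example: 200 GiB of free disk space gives a 10 GiB oplog.
console.log(defaultOplogSizeBytes(200 * GIB) / GIB);
```

Very small disks end up at the 1 gigabyte floor and very large disks at the 50 gigabyte ceiling, which is why a busy replica set on a large volume can still have a short replication window.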

Updates to Multiple Documents at Once

The oplog must translate multi-updates into individual operations in order to maintain idempotency. Idempotency means that the operation will produce the same result given the same input, whether run once or run multiple times. You may run a single statement like db.products.update({count: {$lte : 100}},{$set:{a:5}},{multi:true}) but in fact it might generate thousands of oplog entries if that many documents met the condition {count: {$lte : 100}}. A single update statement must be translated to update each individual document.

Deletions Equal the Same Amount of Data as Inserts

If you delete roughly the same amount of data as you insert, the database will not grow significantly in disk usage, but the size of the oplog can be quite large.

Significant Number of In-Place Updates

If a significant portion of the workload is updates that do not increase the size of the documents, the database records a large number of operations but does not change the size of data on disk.

In our case, the client had all three scenarios for generating a huge oplog. But here comes the interesting part. On all of the replica set members, the oplog size had been changed to 80GB, so the expectation was a correspondingly large replication window. That was not the case. The database was still using a 10GB oplog, and the replication window was only 2.5 hours.

Changing the oplog size on a running replica set is a complex operation, so it is important to compare the configured oplog size with what the running mongod process actually uses. The oplog size and the replication window can be checked on the running mongod instance with the following command.

[code]
replica1:PRIMARY> db.getReplicationInfo()
{
    "logSizeMB" : 81920.00329589844,
    "usedMB" : 81573.46,
    "timeDiff" : 74624,
    "timeDiffHours" : 20.73,
    "tFirst" : "Sun May 29 2016 15:30:41 GMT+0000 (UTC)",
    "tLast" : "Mon May 30 2016 12:14:25 GMT+0000 (UTC)",
    "now" : "Mon May 30 2016 12:14:25 GMT+0000 (UTC)"
}
replica1:PRIMARY>
[/code]

In the example above, we can see the oplog size is 80GB and the replication window is about 20 hours. Be aware that the window can differ depending on the time of day the command is run.

With a 10GB oplog and a replication window of only 2.5 hours, the Secondary node fell outside the window: the oplog entry it needed next had already been overwritten, so it was not able to continue replicating. The only solution in this case is to resync the node.

What is great in MongoDB is that re-joining a stale node or adding a new node only requires adding the node to the replica set. When adding a new node, we only need to run the command rs.add("hostname:port") on the Primary and the initial sync will start automatically. The content of dbpath on the new node must be empty.

How do you change the oplog size?
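
Before resizing, it helps to estimate how large the oplog needs to be. The sketch below is plain JavaScript with hypothetical function names. It assumes the write rate stays roughly constant, so the replication window scales linearly with the oplog size. That is a simplification, since the window varies with the time of day.

```javascript
// Hypothetical helpers: estimate a replication window from one observed data point.
// Assumption: the workload writes to the oplog at a roughly constant rate.

function estimateWindowHours(targetSizeGB, observedSizeGB, observedWindowHours) {
  // GB of oplog consumed per hour under the observed workload
  const gbPerHour = observedSizeGB / observedWindowHours;
  return targetSizeGB / gbPerHour;
}

function requiredOplogSizeGB(targetWindowHours, observedSizeGB, observedWindowHours) {
  const gbPerHour = observedSizeGB / observedWindowHours;
  return targetWindowHours * gbPerHour;
}

// Figures from this case: a 10GB oplog covered about 2.5 hours.
console.log(estimateWindowHours(80, 10, 2.5));  // window expected from an 80GB oplog
console.log(requiredOplogSizeGB(24, 10, 2.5));  // size needed for a 24 hour window
```

With the figures from this case, an 80GB oplog works out to about 20 hours, which is in line with the db.getReplicationInfo() output shown earlier.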

Because the oplog size had only been changed in the config file and the oplog itself was never resized to 80GB, we had to use the procedure below to change the oplog size on all nodes of the replica set:

1. Shut down the Secondary node. Connect to the mongo shell and run:

[code]
use admin
db.shutdownServer()
[/code]

2. Restart the node on a different port and without the replSetName option.

3. Take a backup of the current oplog collection. This step is only a precaution.

[code]
mongodump --db local --collection 'oplog.rs' --out /path/to/dump
[/code]

4. Recreate the oplog collection with the new size (in this case we are creating an 80GB oplog):

[code]
use local
// Keep the most recent oplog entry in a temporary collection
db.temp.drop()
db.temp.save( db.oplog.rs.find( { }, { ts: 1, h: 1 } ).sort( {$natural : -1} ).limit(1).next() )
// Drop the old oplog and recreate it as a capped collection (size is in bytes)
db.oplog.rs.drop()
db.runCommand( { create: "oplog.rs", capped: true, size: (80 * 1024 * 1024 * 1024) } )
// Seed the new oplog with the saved entry so replication can resume from it
db.oplog.rs.save( db.temp.findOne() )
[/code]

5. Shut down the instance and restart the node with replSetName.

6. Update the config file with the oplogSizeMB: <int> variable.

7. Run the command db.getReplicationInfo() on the node and confirm the oplog size was changed.

8. Repeat on all nodes where you want to change the oplog size.
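
The confirmation step can be reduced to a comparison between the configured value and the logSizeMB field that db.getReplicationInfo() reports. The following is a minimal sketch in plain JavaScript: the function name oplogSizeMatchesConfig and the 1MB tolerance are assumptions made for illustration, and the sample object reuses the values from the output shown earlier.

```javascript
// Hypothetical check: does the running oplog size agree with oplogSizeMB in the config?
// The tolerance absorbs the small rounding difference seen in logSizeMB.
function oplogSizeMatchesConfig(replInfo, configuredSizeMB, toleranceMB = 1) {
  return Math.abs(replInfo.logSizeMB - configuredSizeMB) <= toleranceMB;
}

// Sample values copied from the db.getReplicationInfo() output in this post.
const resizedNode = { logSizeMB: 81920.00329589844, usedMB: 81573.46, timeDiffHours: 20.73 };

// An 80GB oplog is 80 * 1024 MB.
console.log(oplogSizeMatchesConfig(resizedNode, 80 * 1024));
```

In the mongo shell, the same function could be called as oplogSizeMatchesConfig(db.getReplicationInfo(), 81920) on each node. A node that still reports roughly 10240 for logSizeMB has only had its config file changed.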

Improvements for WiredTiger in MongoDB 3.0

If you are running WiredTiger in MongoDB 3.0+ and have compression enabled, then with the same oplog size there will be a larger replication window.

Conclusion

Changing the oplog size takes more than modifying the configuration variable oplogSizeMB: <int> and restarting the process. It’s not as simple as the dynamic set global expire_logs_days = X; change in MySQL followed by updating the config file. It can be done with almost zero downtime if we use the rolling upgrade procedure: restart each Secondary node as a standalone, change the oplog size, and add the node back to the replica set. Finally, the Primary should be stepped down and the same changes applied to it. The procedure on each node must finish in less time than the current replication window, or else we break replication again.

At different times of the day, you may have a larger or smaller replication window depending on the writes hitting the database. Be sure that you monitor the oplog size and how large the replication window is. If you don’t have monitoring on the replica set, one network partition may cause the Secondary nodes to go out of sync. If you have a replica set deployment with slaveDelay, it’s important to have a longer replication window so that all members can keep up.

A final warning: with a couple hundred GB of data, the initial sync may take a long time. In some cases it's better to restore the node from backup and re-join it to the replica set. The node will then only need to apply the latest oplog entries.
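
The monitoring advice above can be expressed as a simple threshold check on the timeDiffHours field from db.getReplicationInfo(). This is only a sketch in plain JavaScript: the function name replicationWindowOk and the 12 hour minimum are hypothetical, and the right threshold depends on your own workload. slaveDelay is given in seconds, as in the replica set configuration.

```javascript
// Hypothetical monitoring check: is the replication window comfortably large?
// The window must cover the chosen minimum plus any configured slaveDelay.
function replicationWindowOk(replInfo, minWindowHours, slaveDelaySeconds = 0) {
  const delayHours = slaveDelaySeconds / 3600;
  return replInfo.timeDiffHours >= minWindowHours + delayHours;
}

// Windows seen in this post: about 20.73 hours with 80GB, about 2.5 hours with 10GB.
console.log(replicationWindowOk({ timeDiffHours: 20.73 }, 12));
console.log(replicationWindowOk({ timeDiffHours: 2.5 }, 12));
```

Running a check like this at regular intervals, rather than once, accounts for the window shrinking during the busiest write periods of the day.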
