One of the key features of Exadata V2 is the flash cache. Although commonly thought of as an OLTP-specific feature, it has also been marketed as a data warehouse accelerator. According to this frequently-used presentation slide, a full Exadata rack provides 21 GB/sec of disk throughput and 50GB/sec of flash throughput.

(Note to readers: I’m referring to the Exadata smart flash cache, not the 11gR2 database flash cache/extended SGA, which is an altogether different animal)

I was testing throughput using a simple query, making use of both smart scans and parallel execution. Here’s what the objects look like. They’re running on a quarter rack system with a stated capacity of 4.5GB/sec disk and 11GB/sec flash.

SQL> desc ckbig; Name Null? Type ------------------------------------------------------------------------ -------- ------------------------------------------------- ID NUMBER FILLER VARCHAR2(3900) SQL> explain plan for select count(*) from ckbig; Explained. SQL> set lines 132 SQL> SELECT * FROM TABLE( dbms_xplan.display); PLAN_TABLE_OUTPUT ------------------------------------------------------------------------------------------------------------------------------------ Plan hash value: 3990549585 ---------------------------------------------------------------------------------------------------------------- | Id | Operation | Name | Rows | Cost (%CPU)| Time | TQ |IN-OUT| PQ Distrib | ---------------------------------------------------------------------------------------------------------------- | 0 | SELECT STATEMENT | | 1 | 188K (1)| 00:37:43 | | | | | 1 | SORT AGGREGATE | | 1 | | | | | | | 2 | PX COORDINATOR | | | | | | | | | 3 | PX SEND QC (RANDOM) | :TQ10000 | 1 | | | Q1,00 | P->S | QC (RAND) | | 4 | SORT AGGREGATE | | 1 | | | Q1,00 | PCWP | | | 5 | PX BLOCK ITERATOR | | 44M| 188K (1)| 00:37:43 | Q1,00 | PCWC | | | 6 | TABLE ACCESS STORAGE FULL| CKBIG | 44M| 188K (1)| 00:37:43 | Q1,00 | PCWP | | ---------------------------------------------------------------------------------------------------------------- SQL> select trunc(sum(bytes)/1024/1024/1024)||'G' from dba_segments where segment_name = 'CKBIG'; TRUNC(SUM(BYTES)/1024/1024/1024)||'G' ----------------------------------------- 76G SQL> explain plan for select count(*) from ckbig; Explained. SQL> SELECT * FROM TABLE( dbms_xplan.display); PLAN_TABLE_OUTPUT ----------------------------------------------------------------------------------------------------------------------------------------Plan hash value: 3990549585 ---------------------------------------------------------------------------------------------------------------- | Id | Operation | Name | Rows | Cost (%CPU)| Time | TQ |IN-OUT| PQ Distrib | ---------------------------------------------------------------------------------------------------------------- | 0 | SELECT STATEMENT | | 1 | 188K (1)| 00:37:43 | | | | | 1 | SORT AGGREGATE | | 1 | | | | | | | 2 | PX COORDINATOR | | | | | | | | | 3 | PX SEND QC (RANDOM) | :TQ10000 | 1 | | | Q1,00 | P->S | QC (RAND) | | 4 | SORT AGGREGATE | | 1 | | | Q1,00 | PCWP | | | 5 | PX BLOCK ITERATOR | | 23M| 188K (1)| 00:37:43 | Q1,00 | PCWC | | | 6 | TABLE ACCESS STORAGE FULL| CKBIG | 23M| 188K (1)| 00:37:43 | Q1,00 | PCWP | | ----------------------------------------------------------------------------------------------------------------

Testing the query:

SQL> select name, value from v$mystat ms, v$statname sn where ms.statistic# = sn.statistic# and name in ('physical read total bytes','cell flash cache read hits');

NAME VALUE

---------------------------------------------------------------- ----------

physical read total bytes 0

cell flash cache read hits 0

SQL> set timing on

SQL> select count(*) from ckbig;

COUNT(*)

----------

20000000

Elapsed: 00:00:17.91

SQL> col value format 999999999999

SQL> select name, value from v$mystat ms, v$statname sn where ms.statistic# = sn.statistic# and name in ('physical read total bytes','cell flash cache read hits');

NAME VALUE

---------------------------------------------------------------- -------------

physical read total bytes 81921196032

cell flash cache read hits 0

SQL> select 81921196032/17.91/1024/1024/1024 as gbps from dual;

GBPS

----------

4.25991397

So in this test, we’re getting very close to the stated disk throughput (with some overhead associated with parallel query). But flash isn’t being used at all!

Looking through Oracle’s flash cache technical whitepaper, I found this paragraph in the section about pinning objects:

While the default behavior for sequential scans is to bypass the flash cache, this is not the case when KEEP is specified. If KEEP has been specified for an object, and it is accessed via an offloaded Smart Scan, the object is kept in and scanned from cache. Another advantage of the flash cache is that when an object that is kept in the cache is scanned, the Exadata software will simultaneously read the data from both flash and disk to get a higher aggregate scan rate than is possible from either source independently.

It would appear that one aspect of the flash path that is not automatically adjusting is the flash cache group, and by default the flash cache isn’t used by smart scans at all. There is a good reason for this though: when data sets are larger than the cache size, doing large reads through the cache can age out other cached objects, polluting the cache.

Repeating the test using the “KEEP” parameter:

SQL> alter table ckbig storage (cell_flash_cache keep);

Table altered.

SQL> select name, value from v$mystat ms, v$statname sn where ms.statistic# = sn.statistic# and name in ('physical read total bytes','cell flash cache read hits');

NAME VALUE

---------------------------------------------------------------- ----------

physical read total bytes 0

cell flash cache read hits 0

SQL> set timing on

SQL> select count(*) from ckbig;

COUNT(*)

----------

20000000

Elapsed: 00:00:22.31

SQL> col value format 999999999999

SQL> select name, value from v$mystat ms, v$statname sn where ms.statistic# = sn.statistic# and name in ('physical read total bytes','cell flash cache read hits');

NAME VALUE

---------------------------------------------------------------- -------------

physical read total bytes 81920647168

cell flash cache read hits 259

Slower: maybe overhead for populating the cache? There are virtually no cache read hits because there’s nothing in the cache. Running again, in a new session :

SQL> set timing on

SQL> select count(*) from ckbig;

COUNT(*)

----------

20000000

Elapsed: 00:00:06.63

SQL> col value format 999999999999

SQL> select name, value from v$mystat ms, v$statname sn where ms.statistic# = sn.statistic# and name in ('physical read total bytes','cell flash cache read hits');

NAME VALUE

---------------------------------------------------------------- -------------

physical read total bytes 81920516096

cell flash cache read hits 61209

Elapsed: 00:00:00.00

SQL> select 81920516096/6.63/1024/1024/1024 as gbps from dual;

GBPS

----------

11.5074549

This is actually slightly better than the stated rate of 11 GB/sec.

Confirming that the object is in the cache, by running CellCLI on a storage server:

CellCLI> list flashcachecontent where objectNumber = 115825 detail;

cachedKeepSize: 27370684416

cachedSize: 27409580032

dbID:

dbUniqueName: DBNAME

hitCount: 141814

hoursToExpiration: 24

missCount: 174408

objectNumber: 115825

tableSpaceNumber: 8

27409580032 * 3 cells = 76.6 G, very close to the 76.4G database segment size. So the entire object should be cached.

Running the query once more and getting numbers:

CellCLI> list flashcachecontent where objectNumber = 115825 detail;

cachedKeepSize: 27370684416

cachedSize: 27409580032

dbID:

dbUniqueName: DBNAME

hitCount: 161972

hoursToExpiration: 24

missCount: 180627

objectNumber: 115825

tableSpaceNumber: 8

So the delta is:

hitCount: 20158 (1/3 of total read hits reported at the DB server)

missCount: 6219 (24% of all reads)

total reads: 26377

26377 reads * 3 cells = 79131

79131 * 1024 * 1024 = 82974867456, just over the total read bytes, meaning that the read hit units are in megabytes

By extension, in the original query, there were 61209 flash cache read hits, or 60G. This leaves another 16G read off disk.

A quick summary:

- Objects must be marked as ‘CELL_FLASH_CACHE KEEP’ to be utilized by smart scans

- Once marked as such, reads involving the objects use both disk and flash

- Smart scans with flash assistance are about 2.5 times faster than from disk alone

- My actual measured disk+flash throughput of 11.5 GB/sec is slightly above the stated rate of 11

Share this

Share this

More resources

Learn more about Pythian by reading the following blogs and articles.

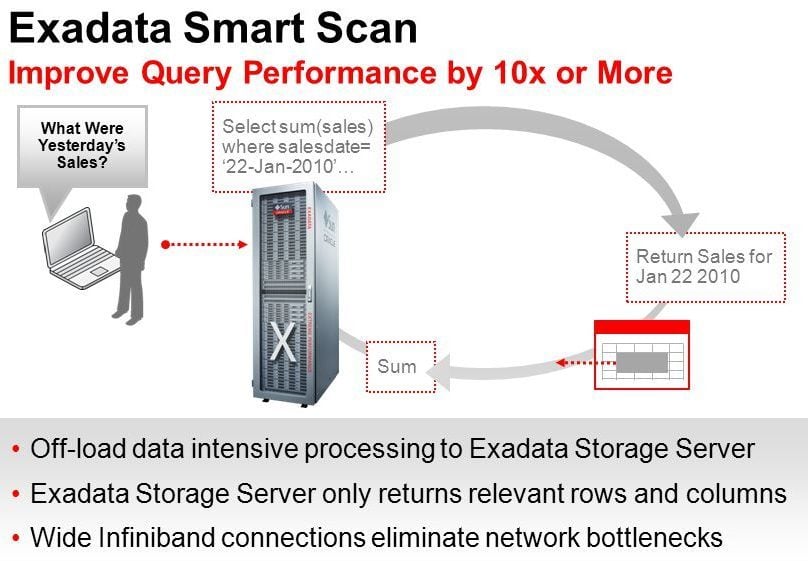

Exadata Smart Scan: A Quick Overview

Exadata TPC Benchmarks

Tips for Better Capacity Planning on Exadata

Ready to unlock value from your data?

With Pythian, you can accomplish your data transformation goals and more.