101 Series: Oracle in Google Cloud – Part 2 : How I Built the GC VM with Ansible

Thanks for stopping by! I posted the first part of this series a few weeks ago, and was preparing to write part 2, which would discuss automation with Ansible. However, because there are very few blog posts about how Ansible works with GC Compute, and because this is a "101" series, I'll simply explain in detail how to create the GC VM with Ansible. This post is broken down into two parts: setting up the gcloud account, JSON file, and SSH keys; and explaining the Ansible GC Compute modules.

Set Up the gcloud Account, JSON File, and the SSH Keys

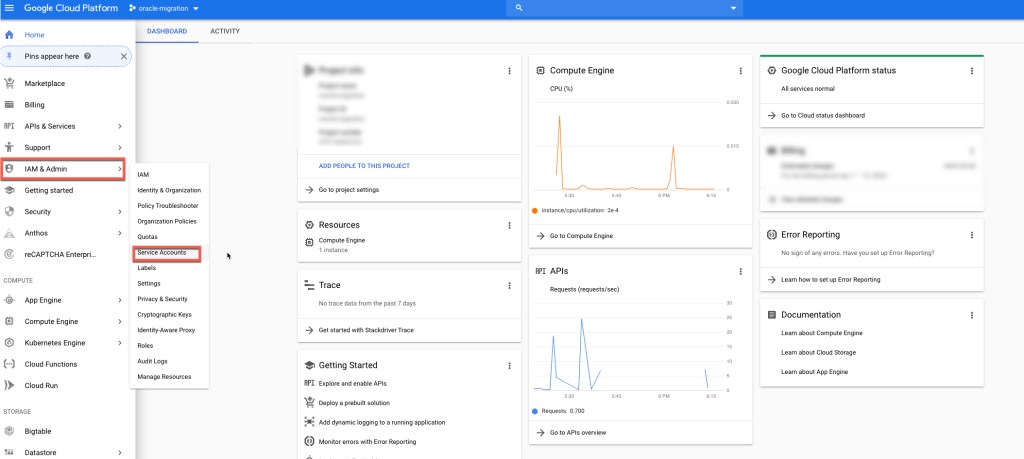

First, create the project's service account that the gcloud CLI tool and Ansible will use to access and provision Compute resources within the project. Log in to https://console.cloud.google.com/ and navigate to the project that you'll be using (in my case, oracle-migration). Then add a new service account to the project by going to IAM & Admin -> Service Accounts, as shown below.

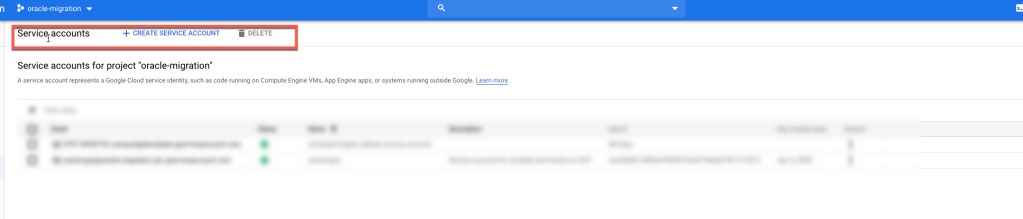

Click Create Service Account.

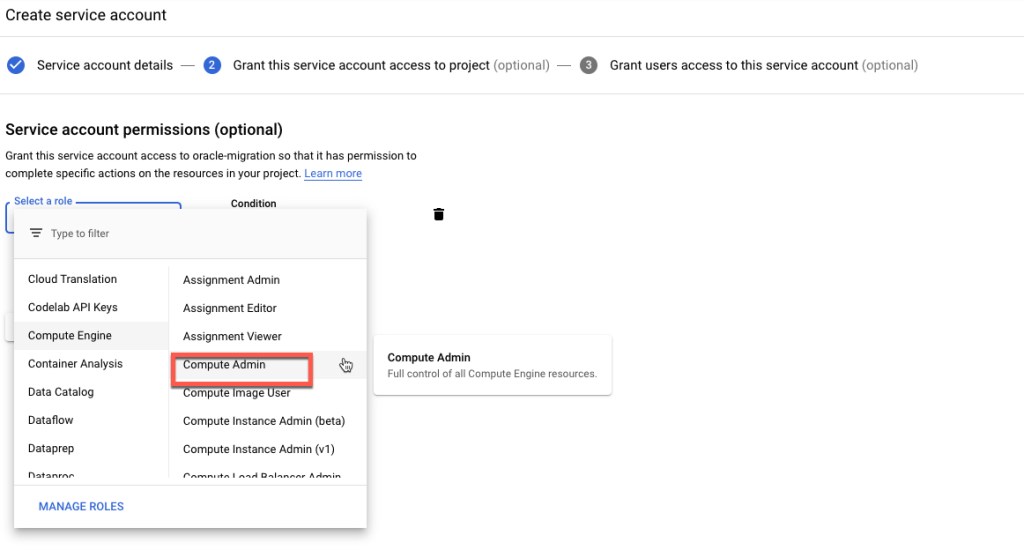

Enter the Service Account Name (I used oraclegcp). Then, click Create. After it's created, assign it the role of Compute Admin.

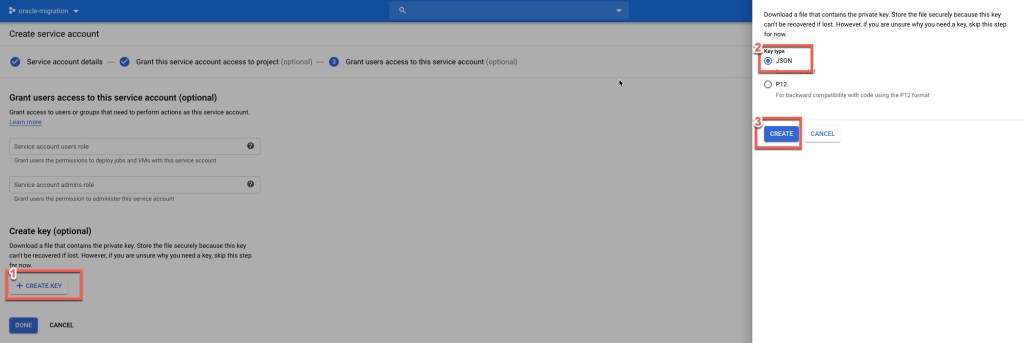

Next, create a private key for the service account. This private key is not the SSH key; it contains the credentials for the service account. Create it as a JSON file and save it on your local system. Remember its location, because you'll reference it in the Ansible project.
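Before wiring the key into Ansible, you can sanity-check it locally. This sketch is my own addition, not part of the OracleOnGCP project: check_key, check_key_file, and expected_email are hypothetical helpers, and it assumes the service account ID is oraclegcp (the console derives the ID from the name you enter, so yours may differ). It only looks at a few of the fields a service account key file contains (type, project_id, private_key, client_email) and at the ACCOUNT_ID@PROJECT_ID.iam.gserviceaccount.com email format.

```python
import json
from pathlib import Path

# A subset of the fields found in a service account JSON key file
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def expected_email(account_id: str, project_id: str) -> str:
    """Service account emails follow ACCOUNT_ID@PROJECT_ID.iam.gserviceaccount.com."""
    return f"{account_id}@{project_id}.iam.gserviceaccount.com"


def check_key(data: dict, account_id: str, project_id: str) -> list:
    """Return a list of problems found in a parsed service account key."""
    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not data.get(name)]
    if data.get("type") and data["type"] != "service_account":
        problems.append(f"unexpected key type: {data['type']}")
    if data.get("project_id") and data["project_id"] != project_id:
        problems.append(f"key is for project {data['project_id']}")
    email = expected_email(account_id, project_id)
    if data.get("client_email") and data["client_email"] != email:
        problems.append(f"key is for {data['client_email']}, expected {email}")
    return problems


def check_key_file(path: str, account_id: str, project_id: str) -> list:
    """Load the JSON key file from disk (~ is expanded) and check it."""
    data = json.loads(Path(path).expanduser().read_text())
    return check_key(data, account_id, project_id)
```

For example, check_key_file("/Users/rene/Documents/GitHub/gcp_json_file/oracle-migration.json", "oraclegcp", "oracle-migration") should return an empty list for a key downloaded from the right project and account.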

Create an RSA key pair for the oracle user on your local system, so that the user's credentials are set up on your VM when it's created.

```
rene@Renes-iMac OracleOnGCP % ssh-keygen -t rsa -b 4096 -f ~/.ssh/oracle -C "oracle"
Generating public/private rsa key pair.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /Users/rene/.ssh/oracle.
Your public key has been saved in /Users/rene/.ssh/oracle.pub.
The key fingerprint is:
SHA256:***************** oracle
The key's randomart image is:
+---[RSA 4096]----+
| . .++ |
| E ..o . |
| ... |
| . ... |
| . +S.o.o. . |
| . B *o++.o o .|
| o .** o o . |
| =Xoo.... . |
| =o+ ooo |
+----[SHA256]-----+
```

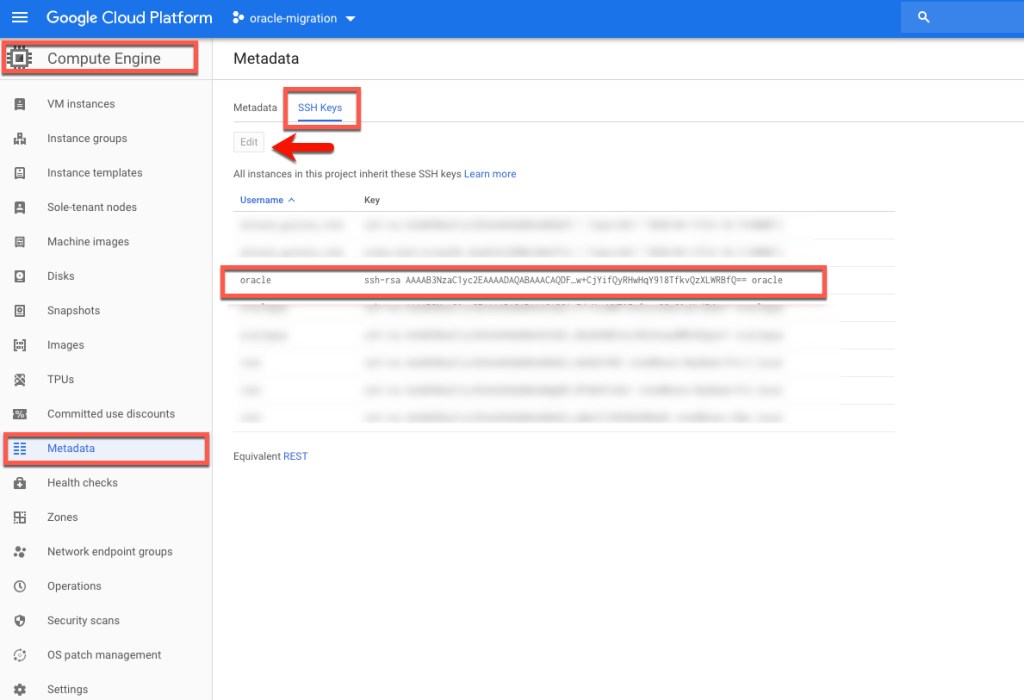

After you create this key, copy the public key (~/.ssh/oracle.pub) to your clipboard:

```
rene@Renes-iMac OracleOnGCP % cat ~/.ssh/oracle.pub | pbcopy
```

Then add it to the Compute Engine metadata: go to Compute Engine -> Metadata -> SSH Keys -> Edit -> Add Item.
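In the project metadata, each entry of the ssh-keys value is stored as USERNAME:KEY_VALUE. When you paste a key into the console, as far as I can tell the username is taken from the comment at the end of the key, which is why ssh-keygen was run with -C "oracle". If you'd rather script this step than use the console, the sketch below builds such an entry from the .pub file. metadata_ssh_entry and entry_from_file are hypothetical helper names, and the code only formats the string; it doesn't call any Google API.

```python
from pathlib import Path

# Prefixes of the key types OpenSSH writes at the start of a public key line
KEY_PREFIXES = ("ssh-", "ecdsa-")


def metadata_ssh_entry(pub_key_line: str, username: str = "") -> str:
    """Build a USERNAME:KEY_VALUE entry for the ssh-keys metadata value.

    Without an explicit username, fall back to the key comment (the third
    field of the .pub line), e.g. "oracle" from ssh-keygen -C "oracle".
    """
    parts = pub_key_line.split()
    if len(parts) < 2 or not parts[0].startswith(KEY_PREFIXES):
        raise ValueError("not an OpenSSH public key line")
    user = username or (parts[2] if len(parts) > 2 else "")
    if not user:
        raise ValueError("no username given and the key has no comment")
    return f"{user}:{' '.join(parts)}"


def entry_from_file(path: str = "~/.ssh/oracle.pub", username: str = "") -> str:
    """Read a public key file and return its metadata entry."""
    return metadata_ssh_entry(Path(path).expanduser().read_text(), username)
```

With the key generated above, entry_from_file() should return a line starting with oracle:ssh-rsa.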

Before you install the ansible, requests, and google-auth packages, I recommend that you install Python 3 and the latest version of pip. If you are using OS X, click the link below:

With Python 3 and pip installed, install the ansible, requests, and google-auth packages.

```
pip install ansible
pip install requests google-auth
```

Here's what I have installed:

- Ansible: 2.9.6

- pip: 20.0.2

- Python: 3.7.7
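A quick way to confirm the environment before running the playbook is to check that the packages import under the Python interpreter you'll run Ansible with. A minimal sketch (missing_modules is a hypothetical helper; note that the google-auth package is imported as google.auth):

```python
import importlib
import sys

# Module names as imported in Python; the google-auth package is google.auth
REQUIRED_MODULES = ["ansible", "requests", "google.auth"]


def missing_modules(names):
    """Return the names from `names` that cannot be imported."""
    missing = []
    for name in names:
        try:
            importlib.import_module(name)
        except ImportError:  # also covers ModuleNotFoundError
            missing.append(name)
    return missing


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    print("Missing modules:", missing_modules(REQUIRED_MODULES) or "none")
```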

Explaining the Ansible GC Compute Modules

I won't go into details about what Ansible is and how it's used. Instead, I'll explain the Ansible gcp modules.

You can find this project at https://github.com/rene-ace/OracleOnGCP:

```
git clone https://github.com/rene-ace/OracleOnGCP
```

Make sure you go there (or clone it) before following the rest of this post. Keep in mind that this playbook doesn't run against any remote hosts, so it has to be run on localhost. Therefore, the ansible.cfg file is as shown below:

```ini
[defaults]
host_key_checking = False
roles_path = roles
inventory = inventories/hosts
remote_user = oracle
private_key_file = ~/.ssh/oracle

[inventory]
enable_plugins = host_list, script, yaml, ini, auto, gcp_compute
```

The hosts file in inventories/hosts is as follows:

```ini
[defaults]
inventory = localhost,
```

I created this Ansible playbook with a role whose tasks are specified in roles/gcp_instance/tasks/main.yml. That file imports create.yml, which creates the GCP Compute instance, and delete.yml, which deletes the GCP Compute instance and its environment. You can call the Ansible playbook as shown below:

- To create: ansible-playbook -t create create_oracle_on_gcp.yml

- To delete: ansible-playbook -t delete create_oracle_on_gcp.yml

Each import in roles/gcp_instance/tasks/main.yml carries a tag, and that tag is what -t create or -t delete selects:

```yaml
---
- import_tasks: create.yml
  tags:
    - create

- import_tasks: delete.yml
  tags:
    - delete
```

You can find the variables I'm using for the GCP modules in roles/gcp_instance/vars/main.yml. Make sure to change them to match your environment.

```yaml
---
# Common vars for Role gcp_instance_creation
# Set according to the region and zone you desire
gcp_project_name: "oracle-migration"
gcp_region: "us-central1"
gcp_zone: "us-central1-c"
gcp_cred_kind: "serviceaccount"
gcp_cred_file: "/Users/rene/Documents/GitHub/gcp_json_file/oracle-migration.json"

# Vars for the task to create the ASM disk
gcp_asm_disk_name: "rene-ace-disk-asm1"
gcp_asm_disk_type: "pd-ssd"
gcp_asm_disk_size: "150"
gcp_asm_disk_labels: "item=rene-ace"

# Vars for the task to create the instance boot disk
# We are creating a CentOS 7 instance
# Should you require a different image, change gcp_boot_disk_image accordingly
gcp_boot_disk_name: "rene-ace-inst1-boot-disk"
gcp_boot_disk_type: "pd-standard"
gcp_boot_disk_size: "100"
gcp_boot_disk_labels: "item=rene-ace"
gcp_boot_disk_image: "projects/centos-cloud/global/images/centos-7-v20200309"

# Vars for the task to create the Oracle VM instance
# Change gcp_machine_type to the machine type you desire
gcp_instance_name: "rene-ace-test-inst1"
gcp_machine_type: "n1-standard-8"

# Vars for the network and firewall creation tasks
gcp_network_name: "network-oracle-instances"
gcp_subnet_name: "network-oracle-instances-subnet"
gcp_firewall_name: "oracle-firewall"
gcp_ip_cidr_range: "172.16.0.0/16"
```
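Since several of these values must change for your environment, a small pre-flight check can catch typos before any API call is made. This is an assumption of mine, not part of the role: check_vars is a hypothetical helper and GCP_VARS copies a few values from the file above. It checks that the zone belongs to the region (zone names are the region name plus a letter suffix, as in us-central1-c), that gcp_ip_cidr_range is a valid private range that doesn't overlap 10.128.0.0/9 (the block that auto mode VPC networks use for their automatic subnets; the network task in this role sets auto_create_subnetworks to 'true'), and that the disk sizes are whole numbers of GB.

```python
import ipaddress

# A few values copied from roles/gcp_instance/vars/main.yml
GCP_VARS = {
    "gcp_region": "us-central1",
    "gcp_zone": "us-central1-c",
    "gcp_ip_cidr_range": "172.16.0.0/16",
    "gcp_asm_disk_size": "150",
    "gcp_boot_disk_size": "100",
}

# Block that auto mode VPC networks draw their automatic subnets from
AUTO_MODE_RANGE = ipaddress.ip_network("10.128.0.0/9")


def check_vars(v: dict) -> list:
    """Return a list of problems found in the role variables."""
    problems = []
    # Zone names are the region name plus a "-<letter>" suffix
    if not v["gcp_zone"].startswith(v["gcp_region"] + "-"):
        problems.append(f"zone {v['gcp_zone']} is not in region {v['gcp_region']}")
    try:
        net = ipaddress.ip_network(v["gcp_ip_cidr_range"])  # strict: no host bits allowed
    except ValueError as exc:
        problems.append(f"bad CIDR range: {exc}")
    else:
        if not net.is_private:
            problems.append(f"{net} is not a private range")
        if net.overlaps(AUTO_MODE_RANGE):
            problems.append(f"{net} overlaps auto mode range {AUTO_MODE_RANGE}")
    for key in ("gcp_asm_disk_size", "gcp_boot_disk_size"):
        # Sizes are quoted strings in the YAML, so check they hold whole, non-zero GB
        if not v[key].isdigit() or int(v[key]) == 0:
            problems.append(f"{key} must be a positive whole number of GB")
    return problems
```

With the values above, check_vars(GCP_VARS) should return an empty list.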

Create the boot disk with a CentOS 7 image, and the ASM disk for the OHAS installation we're performing. Remember how you register these disks, because you'll use those names when you create the GCP VM instance.

```yaml
# Creation of the Boot disk
- name: Task to create the Instance Boot disk
  gcp_compute_disk:
    name: ""
    size_gb: ""
    type: ""
    source_image: ""
    zone: ""
    project: ""
    auth_kind: ""
    service_account_file: ""
    scopes:
      - https://www.googleapis.com/auth/compute
    state: present
  register: disk_boot

# Creation of the ASM disk
- name: Task to create the ASM disk
  gcp_compute_disk:
    name: ""
    type: ""
    size_gb: ""
    zone: ""
    project: ""
    auth_kind: ""
    service_account_file: ""
    state: present
  register: disk_asm_1
```

Next, create the VPC network, its subnet, and an external IP address. Remember how you register them, because later modules refer to them by those names. For example, the network parameter of the gcp_compute_subnetwork module points to network, which is the name under which the gcp_compute_network result was registered.

```yaml
- name: Task to create a network
  gcp_compute_network:
    name: 'network-oracle-instances'
    auto_create_subnetworks: 'true'
    project: ""
    auth_kind: ""
    service_account_file: ""
    scopes:
      - https://www.googleapis.com/auth/compute
    state: present
  register: network

# Creation of a Sub Network
- name: Task to create a subnetwork
  gcp_compute_subnetwork:
    name: network-oracle-instances-subnet
    region: ""
    network: ""
    ip_cidr_range: ""
    project: ""
    auth_kind: ""
    service_account_file: ""
    state: present
  register: subnet

# Creation of the Network address
- name: Task to create a address
  gcp_compute_address:
    name: ""
    region: ""
    project: ""
    auth_kind: ""
    service_account_file: ""
    scopes:
      - https://www.googleapis.com/auth/compute
    state: present
  register: address
```

Open port 22 on the firewall for the VM you're about to create, and set the source ranges. Because this is a test environment, I allow access from all IP addresses (0.0.0.0/0). Pay close attention to how you set up your network tags (target_tags): if they don't match the tags on the instance, you won't be able to SSH to your VM.

```yaml
# Creation of the Firewall Rule
- name: Task to create a firewall
  gcp_compute_firewall:
    name: oracle-firewall
    network: ""
    allowed:
      - ip_protocol: tcp
        ports: ['22']
    source_ranges: ['0.0.0.0/0']
    target_tags:
      - oracle-ssh
    project: ""
    auth_kind: ""
    service_account_file: ""
    scopes:
      - https://www.googleapis.com/auth/compute
    state: present
  register: firewall
```

After setting up the disks, network, and firewall, create the GCP VM instance. Attach both disks created above: for the registered disk_boot, set boot to true so the VM boots from it; for disk_asm_1, set boot to false. As shown below, use the registered names created above (network, subnet, and address) for the network_interfaces.

In access_configs, ONE_TO_ONE_NAT is the only type the module accepts, and External NAT is the default, recommended name; that's why those are hard-coded values. In the tags section, assign the same network tag used in the firewall module above, so that you can connect to port 22 of the VM instance you're creating.

```yaml
# Creation of the Oracle Instance
- name: Task to create the Oracle Instance
  gcp_compute_instance:
    state: present
    name: ""
    machine_type: ""
    disks:
      - auto_delete: true
        boot: true
        source: ""
      - auto_delete: true
        boot: false
        source: ""
    network_interfaces:
      - network: ""
        subnetwork: ""
        access_configs:
          - name: External NAT
            nat_ip: ""
            type: ONE_TO_ONE_NAT
    tags:
      items: oracle-ssh
    zone: ""
    project: ""
    auth_kind: ""
    service_account_file: ""
    scopes:
      - https://www.googleapis.com/auth/compute
  register: instance
```

After you create your GCP VM, wait for SSH to become available, then add the host to the ansible-playbook in-memory inventory.

```yaml
- name: Wait for SSH to come up
  wait_for: host= port=22 delay=10 timeout=60

- name: Add host to groupname
  add_host: hostname= groupname=oracle_instances
```

If everything is done correctly, run the playbook with the create tag. You should see output similar to the following.

rene@Renes-iMac OracleOnGCP % ansible-playbook -t create create_oracle_on_gcp.yml

[WARNING]: Unable to parse /Users/rene/Documents/GitHub/OracleOnGCP/inventories/hosts as an inventory source

[WARNING]: No inventory was parsed, only implicit localhost is available

[WARNING]: provided hosts list is empty, only localhost is available. Note that the implicit localhost does not match 'all'

PLAY [Playbook to create Oracle on Google Cloud] *********************************************************************************************************************************************************************************************************

TASK [gcp_instance : Task to create the Instance Boot disk] **********************************************************************************************************************************************************************************************

changed: [localhost]

TASK [gcp_instance : Task to create the ASM disk] ********************************************************************************************************************************************************************************************************

changed: [localhost]

TASK [gcp_instance : Task to create a network] ***********************************************************************************************************************************************************************************************************

changed: [localhost]

TASK [gcp_instance : Task to create a subnetwork] ********************************************************************************************************************************************************************************************************

changed: [localhost]

TASK [gcp_instance : Task to create a address] ***********************************************************************************************************************************************************************************************************

changed: [localhost]

TASK [gcp_instance : Task to create a firewall] **********************************************************************************************************************************************************************************************************

changed: [localhost]

TASK [gcp_instance : Task to create the Oracle Instance] *************************************************************************************************************************************************************************************************

changed: [localhost]

TASK [gcp_instance : Wait for SSH to come up] ************************************************************************************************************************************************************************************************************

ok: [localhost]

TASK [gcp_instance : Add host to groupname] **************************************************************************************************************************************************************************************************************

changed: [localhost]

PLAY RECAP ***********************************************************************************************************************************************************************************************************************************************

localhost : ok=9 changed=7 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
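The Wait for SSH to come up task returned ok because port 22 on the new VM started accepting connections within the timeout. To show what that task (wait_for with port=22 delay=10 timeout=60) amounts to, here is a rough Python stand-in. wait_for_port is a hypothetical name, and it approximates the module's behavior rather than reproducing it exactly; you would pass it the VM's external IP address.

```python
import socket
import time


def wait_for_port(host: str, port: int = 22, delay: float = 10,
                  timeout: float = 60, interval: float = 1) -> bool:
    """Rough Python stand-in for wait_for with host, port, delay, and timeout.

    Waits `delay` seconds, then retries a TCP connect to host:port every
    `interval` seconds until one succeeds (True) or `timeout` seconds have
    passed since the call started (False). Like wait_for with only a port,
    it checks that the port accepts connections, not that SSH login works.
    """
    deadline = time.monotonic() + timeout
    time.sleep(delay)
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unreachable, or timed out
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```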

You should now have your GCP VM. The last thing to do is test SSH connectivity to it.

```
rene@Renes-iMac OracleOnGCP % ssh -i ~/.ssh/oracle oracle@34.***.***.**8
The authenticity of host '34.***.***.**8 (34.***.***.**8)' can't be established.
ECDSA key fingerprint is *******************.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '34.***.***.**8' (ECDSA) to the list of known hosts.
-bash: warning: setlocale: LC_CTYPE: cannot change locale (UTF-8): No such file or directory
[oracle@rene-ace-test-inst1 ~]$ id
uid=1000(oracle) gid=1001(oracle) groups=1001(oracle),4(adm),39(video),1000(google-sudoers) context=unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023
```
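This connection only works because the instance carries the oracle-ssh tag that the oracle-firewall rule targets. In a newly created VPC network like this one, GCP's implied rule denies any ingress traffic that no firewall rule allows, so a mismatched tag leaves port 22 unreachable. The toy model below illustrates that matching logic; IngressAllowRule and rule_allows are hypothetical names, and the model deliberately ignores rule priorities, deny rules, and protocols other than TCP.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List


@dataclass
class IngressAllowRule:
    """Simplified ingress allow rule: TCP ports, source ranges, target tags."""
    ports: List[int]
    source_ranges: List[str]
    target_tags: List[str] = field(default_factory=list)


def rule_allows(rule: IngressAllowRule, instance_tags: List[str],
                src_ip: str, port: int) -> bool:
    """True if `rule` lets src_ip reach `port` on an instance with `instance_tags`.

    A rule without target tags applies to every instance in the network;
    otherwise the instance must carry at least one of the rule's tags.
    """
    if rule.target_tags and not set(rule.target_tags) & set(instance_tags):
        return False
    if port not in rule.ports:
        return False
    ip = ipaddress.ip_address(src_ip)
    return any(ip in ipaddress.ip_network(r) for r in rule.source_ranges)


# The oracle-firewall rule from the role, expressed in this model
ORACLE_FIREWALL = IngressAllowRule(ports=[22], source_ranges=["0.0.0.0/0"],
                                   target_tags=["oracle-ssh"])
```

For example, rule_allows(ORACLE_FIREWALL, ["oracle-ssh"], "203.0.113.7", 22) is True, while the same call with the instance tagged ["oracle"] is False.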

As you can see, it's not that complicated. But not knowing how network tags work, or how Ansible uses registered variable names, can cost you hours of troubleshooting. In the next post of this series, we'll automate the OHAS and DB creation.

Note: This was originally posted at rene-ace.com.

Share this

Share this

More resources

Learn more about Pythian by reading the following blogs and articles.

101 Series of Oracle in Google Cloud - Part I : Building ASM and Database

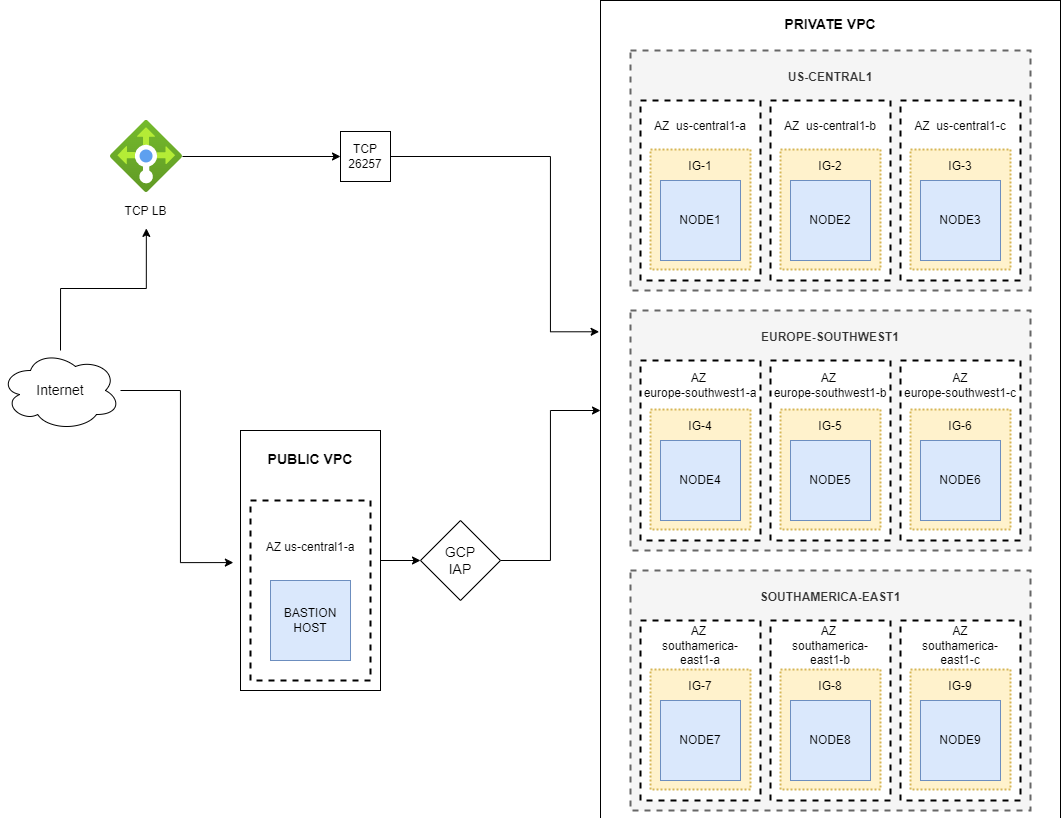

How to deploy a Cockroach DB Cluster in GCP? Part II

Using El Carro Operator on AWS

Ready to unlock value from your data?

With Pythian, you can accomplish your data transformation goals and more.