Still have binlog_error_action as IGNORE_ERROR?

mysql> show master status; Empty set (0.00 sec) mysql> show binary logs; ERROR 1381 (HY000): You are not using binary loggingInterestingly, the MySQL slave was still reporting that everything was okay!

mysql> show slave status\G *************************** 1. row *************************** Slave_IO_State: Waiting for master to send event Master_Host: master Master_User: replicator Master_Port: 3306 Connect_Retry: 60 Master_Log_File: mysql-bin.000186 Read_Master_Log_Pos: 1069066810 Relay_Log_File: mysqld-relay-bin.000245 Relay_Log_Pos: 1069067004 Relay_Master_Log_File: mysql-bin.000186 Slave_IO_Running: Yes Slave_SQL_Running: Yes Replicate_Do_DB: Replicate_Ignore_DB: Replicate_Do_Table: Replicate_Ignore_Table: Replicate_Wild_Do_Table: Replicate_Wild_Ignore_Table: Last_Errno: 0 Last_Error: Skip_Counter: 0 Exec_Master_Log_Pos: 1069066810 Relay_Log_Space: 1069067296 Until_Condition: None Until_Log_File: Until_Log_Pos: 0 Master_SSL_Allowed: No Master_SSL_CA_File: Master_SSL_CA_Path: Master_SSL_Cert: Master_SSL_Cipher: Master_SSL_Key: Seconds_Behind_Master: 0 Master_SSL_Verify_Server_Cert: No Last_IO_Errno: 0 Last_IO_Error: Last_SQL_Errno: 0 Last_SQL_Error: Replicate_Ignore_Server_Ids: Master_Server_Id: 23294781 Master_UUID: 7e2e60eb-39fb-11ea-a248-005056b589fd Master_Info_File: mysql.slave_master_info SQL_Delay: 0 SQL_Remaining_Delay: NULL Slave_SQL_Running_State: Slave has read all relay log; waiting for the slave I/O thread to update it Master_Retry_Count: 86400 Master_Bind: Last_IO_Error_Timestamp: Last_SQL_Error_Timestamp: Master_SSL_Crl: Master_SSL_Crlpath: Retrieved_Gtid_Set: 7e2e60eb-39fb-11ea-a248-005056b589fd:50961813-81101493 Executed_Gtid_Set: 2366bbb5-39fb-11ea-a246-005056b5f82f:1-818, 7e2e60eb-39fb-11ea-a248-005056b589fd:1-81101493 Auto_Position: 1 1 row in set (0.00 sec)Guess what my colleague saw in the error log?

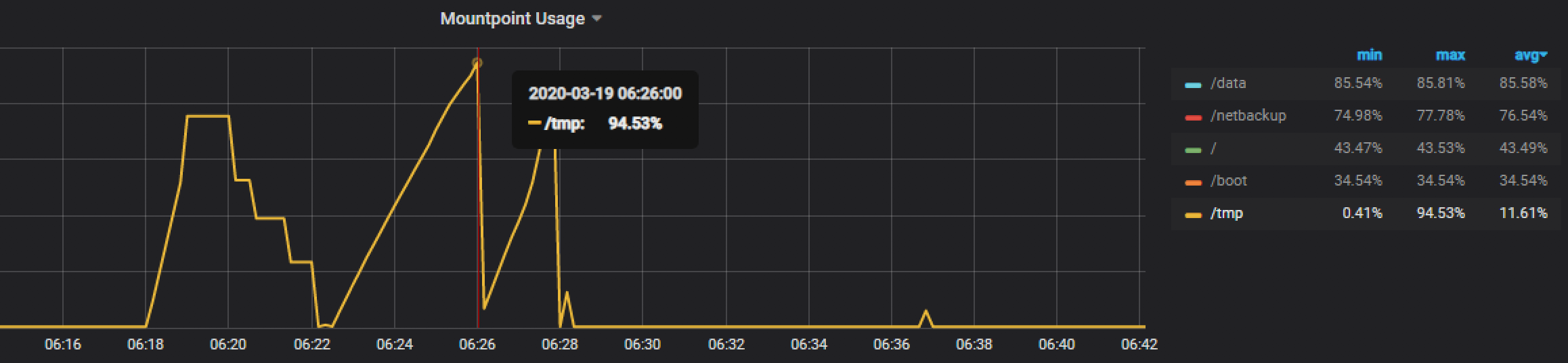

2020-03-18 07:23:50 14694 [ERROR] /usr/sbin/mysqld: Incorrect key file for table '/tmp/#sql_3966_2.MYI'; try to repair it 2020-03-18 07:23:50 14694 [ERROR] Got an error from unknown thread, /mnt/workspace/percona-server-5.6-redhat-binary-new/label_exp/min-centos-7-x64/test/rpmbuild/BUILD/percona-server-5.6.46-86.2/storage/myisam/mi_write.c:230 2020-03-18 14:10:46 14694 [Warning] IP address '192.168.0.10' could not be resolved: Name or service not known 2020-03-19 00:55:59 7f5589a9a700 InnoDB: Error: Write to file (merge) failed at offset 5678039040. InnoDB: 1048576 bytes should have been written, only 892928 were written. InnoDB: Operating system error number 28. InnoDB: Check that your OS and file system support files of this size. InnoDB: Check also that the disk is not full or a disk quota exceeded. InnoDB: Error number 28 means 'No space left on device'. InnoDB: Some operating system error numbers are described at InnoDB: http://dev.mysql.com/doc/refman/5.6/en/operating-system-error-codes.html 2020-03-19 00:57:59 7f5589a9a700 InnoDB: Error: Write to file (merge) failed at offset 3308257280. InnoDB: 1048576 bytes should have been written, only 888832 were written. InnoDB: Operating system error number 28. InnoDB: Check that your OS and file system support files of this size. InnoDB: Check also that the disk is not full or a disk quota exceeded. InnoDB: Error number 28 means 'No space left on device'. InnoDB: Some operating system error numbers are described at InnoDB: http://dev.mysql.com/doc/refman/5.6/en/operating-system-error-codes.html 2020-03-19 13:29:08 14694 [ERROR] /usr/sbin/mysqld: Incorrect key file for table '/tmp/#sql_3966_2.MYI'; try to repair it 2020-03-19 13:29:08 14694 [ERROR] Got an error from unknown thread, /mnt/workspace/percona-server-5.6-redhat-binary-new/label_exp/min-centos-7-x64/test/rpmbuild/BUILD/percona-server-5.6.46-86.2/storage/myisam/mi_write.c:230 2020-03-19 13:29:11 14694 [ERROR] /usr/sbin/mysqld: Incorrect key file for table '/tmp/#sql_3966_5.MYI'; try to repair it 2020-03-19 13:29:11 14694 [ERROR] Got an error from unknown thread, /mnt/workspace/percona-server-5.6-redhat-binary-new/label_exp/min-centos-7-x64/test/rpmbuild/BUILD/percona-server-5.6.46-86.2/storage/myisam/mi_write.c:230 2020-03-19 13:29:11 14694 [ERROR] /usr/sbin/mysqld: Incorrect key file for table '/tmp/#sql_3966_2.MYI'; try to repair it ... 2020-03-19 15:27:33 14694 [ERROR] Got an error from unknown thread, /mnt/workspace/percona-server-5.6-redhat-binary-new/label_exp/min-centos-7-x64/test/rpmbuild/BUILD/percona-server-5.6.46-86.2/storage/myisam/mi_write.c:230 2020-03-19 15:27:33 14694 [ERROR] /usr/sbin/mysqld: Incorrect key file for table '/tmp/#sql_3966_3.MYI'; try to repair it 2020-03-19 15:27:33 14694 [ERROR] Got an error from unknown thread, /mnt/workspace/percona-server-5.6-redhat-binary-new/label_exp/min-centos-7-x64/test/rpmbuild/BUILD/percona-server-5.6.46-86.2/storage/myisam/mi_write.c:230 2020-03-19 15:27:35 14694 [ERROR] An error occurred during flush stage of the commit. 'binlog_error_action' is set to 'IGNORE_ERROR'. Hence turning logging off for the whole duration of the MySQL server process. To turn it on again: fix the cause, shutdown the MySQL server and restart it.Further debugging of the issue with PMM, confirmed a spike on the /tmp disk. [caption id="attachment_107751" align="alignnone" width="2316"]

PMM Disk Check[/caption]

The environment here is an active-passive replication with the Orchestrator looking over them and doing its thing. It also has application traffic flowing through a ProxySQL cluster directed to the current active database.

PMM Disk Check[/caption]

The environment here is an active-passive replication with the Orchestrator looking over them and doing its thing. It also has application traffic flowing through a ProxySQL cluster directed to the current active database.

So, What's the Situation?

- We need to restart the master to fix this, as mentioned in the error-log.

- The master is no longer writing binary logs and therefore the slave is broken.

- Because the slave is broken, it's no longer able to take over an active position. No backups and no HA.

# Disable recoveries on Orchestrator: orchestrator-client -c disable-global-recoveries # Change database configuration add following variable: binlog_error_action=ABORT_SERVER # Restart MySQL # Verify error log & binary log # Enable global recoveries: orchestrator-client -c enable-global-recoveries # Take fresh backup on master # Restore passive-node (Slave) & reconfigure replication. # Raise /tmp disk partition more than largest table-size.

Bad Default?!

This option was introduced in MySQL 5.6.22 as a solution to the bug report about "mysqld continues operation without logging when binlogs cannot be written!" The default value was "IGNORE_ERROR". The alternate option is to crash the server with the value "ABORT_SERVER". Had it been ABORT_SERVER, we had our SWAT team of Orchestrator and ProxySQL to manage the HA. They'd have performed the failover to the passive-node. Then, the only thing left to resolve this issue is to verify the status of the aborted (crashed) MySQL database server, which, if running at all, would already be demoted to a passive position. Thankfully, this default is changed in MySQL 5.7.7 and later versions to ABORT_SERVER.A Task for You!

Here is something for you to do: For the EOL of MySQL 5.6, we still have almost a year to go. It's scheduled for February 2021. If you're using MySQL 5.6, go ahead and change the binlog_error_action variable. This is a dynamic change and won't affect anything in "live." Make sure you persist it in the MySQL config file.mysql> SET GLOBAL binlog_error_action=ABORT_SERVER;

Comment from a remote colleague

With everything going on in the world, we hope you're laying low and working from home. Pythian has been cultivating a remote-work culture for more than a decade. If you're struggling with the remote team and facing challenges (technical or not), it's possible we've already encountered them, and have a solution. Pythian is here to help. References :- https://bugs.mysql.com/bug.php?id=51014

- https://mysqlserverteam.com/new-option-to-stop-the-server-if-binlogging-fails-in-mysql-5-6/

- https://jira.percona.com/browse/PXC-2546

On this page

Share this

Share this

More resources

Learn more about Pythian by reading the following blogs and articles.

When SHOW SLAVE STATUS and the error log Disagree

![]()

When SHOW SLAVE STATUS and the error log Disagree

Apr 25, 2008 12:00:00 AM

3

min read

InnoDB error: Total number of locks exceeds the lock table size

![]()

InnoDB error: Total number of locks exceeds the lock table size

May 19, 2011 12:00:00 AM

3

min read

When SHOW SLAVE STATUS lies

![]()

When SHOW SLAVE STATUS lies

Apr 6, 2008 12:00:00 AM

3

min read

Ready to unlock value from your data?

With Pythian, you can accomplish your data transformation goals and more.