Using El Carro Operator on AWS

Introduction

Google recently released a Kubernetes operator for Oracle, El Carro.

The project includes good examples of how it works on GCP and on your local computer (using minikube). Given it is a portable implementation, we wanted to give it a try on AWS deploying an Oracle XE database.

The changes from the original instructions are mainly to replace GCP services with the equivalent in AWS:

- Amazon Elastic Container Registry (ECR) instead of Google Container Registry (GCR) to store the Docker image built for the database

- AWS CodeBuild instead of Google Cloud Build to execute the steps needed to create that image and push it to the repository

- Amazon Elastic Kubernetes Service (EKS) instead of Google Kubernetes Engine (GKE) to run the k8s cluster

- Amazon Elastic Block Store (EBS) Container Storage Interface (CSI) driver instead of the GCP Compute Engine persistent disk CSI driver to handle persistent volumes inside k8s, where data will be stored (provisioner ebs.csi.aws.com instead of pd.csi.storage.gke.io)

This post walks through the steps we performed using AWS services to deploy an Oracle XE database on k8s using the El Carro operator.

Note that this is just an example covering similar steps described in the 18c XE quickstart guide for GCP; it may not be the only way to make this work in AWS. Also, the names for the resources we create in AWS are the same as described in the GCP guide. We were looking to make it easy to follow and compare, but you may want to customize that to meet your needs—for example, GCLOUD as the container database name, gke as the dbDomain, csi-gce-pd storage class name, etc.

Preparation

I used an AWS account still in its first year with enough free services quota for most of the compute and storage needs of this test.

The first difference I found is that while you can run this test on GCP using Cloud Shell, the equivalent AWS CloudShell doesn’t support Docker containers. Looking to save time, we used a small EC2 instance (t2.micro) with the Amazon Linux 2 AMI image, where docker can be installed easily.

All steps below were executed as the ec2-user from within the EC2 instance:

1) Download the El Carro software:

$ wget https://github.com/GoogleCloudPlatform/elcarro-oracle-operator/releases/download/v0.0.0-alpha/release-artifacts.tar.gz
$ tar -xzvf release-artifacts.tar.gz

That will create the directory v0.0.0-alpha in your working directory.

2) Install git and docker:

$ sudo amazon-linux-extras install docker
$ sudo yum update -y
$ sudo service docker start
$ sudo usermod -a -G docker ec2-user
$ sudo reboot
$ sudo yum install git

3) Configure the AWS client:

$ aws configure

Enter the following data from your account, which is available in your AWS console:

AWS Access Key ID [None]: <your AK id>
AWS Secret Access Key [None]: <your SAK>
Default region name [None]: <your region>

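Prepare environment variables for values that will be needed frequently: the region and the account ID. Note that ZONE holds the region name, because the sed expression strips the trailing availability-zone letter. The account ID is requested with --output text so the value is not wrapped in double quotes:

$ export ZONE=$(curl -s http://169.254.169.254/latest/meta-data/placement/availability-zone | sed 's/\(.*\)[a-z]/\1/') ; echo $ZONE
$ export ACC_ID=$(aws sts get-caller-identity --query "Account" --output text) ; echo $ACC_ID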
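The two values above depend on small text transformations that are easy to get wrong: dropping the availability-zone letter, and dealing with the double quotes that the AWS CLI's default JSON output puts around string values. A minimal sketch of both as reusable functions follows; the function names are ours and are not part of any AWS tooling.

```shell
# Hypothetical helpers; the names are illustrative only.

# Drop the trailing letter of an availability zone (us-east-2a -> us-east-2).
region_from_az() {
  printf '%s\n' "$1" | sed 's/[a-z]$//'
}

# Remove surrounding double quotes from a JSON string value.
strip_quotes() {
  printf '%s\n' "$1" | sed 's/^"//; s/"$//'
}

region_from_az us-east-2a        # prints us-east-2
strip_quotes '"123456789012"'    # prints 123456789012
```

An unquoted account ID matters later on, since it becomes part of an S3 bucket name and of several ARNs.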

Creating a Containerized Oracle Database Image

The following steps create a Docker container image and push it into an AWS ECR repository, automating the process with AWS CodeBuild.

1) Create a service role, needed for CodeBuild, as described in the AWS CodeBuild documentation:

$ vi create-service-role.json

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "codebuild.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}

$ vi put-role-policy.json

$ cat put-role-policy.json

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "CloudWatchLogsPolicy",
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents"
      ],
      "Resource": [
        "*"
      ]
    },
    {
      "Sid": "CodeCommitPolicy",
      "Effect": "Allow",
      "Action": [
        "codecommit:GitPull"
      ],
      "Resource": [
        "*"
      ]
    },
    {
      "Sid": "S3GetObjectPolicy",
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:GetObjectVersion"
      ],
      "Resource": [
        "*"
      ]
    },
    {
      "Sid": "S3PutObjectPolicy",
      "Effect": "Allow",
      "Action": [
        "s3:PutObject"
      ],
      "Resource": [
        "*"
      ]
    },
    {
      "Sid": "S3BucketIdentity",
      "Effect": "Allow",
      "Action": [
        "s3:GetBucketAcl",
        "s3:GetBucketLocation"
      ],
      "Resource": [
        "*"
      ]
    },
    {
      "Action": [
        "ecr:BatchCheckLayerAvailability",
        "ecr:CompleteLayerUpload",
        "ecr:GetAuthorizationToken",
        "ecr:InitiateLayerUpload",
        "ecr:PutImage",
        "ecr:UploadLayerPart"
      ],
      "Resource": "*",
      "Effect": "Allow"
    }
  ]
}

$ aws iam create-role --role-name CodeBuildServiceRole --assume-role-policy-document file://create-service-role.json

$ aws iam put-role-policy --role-name CodeBuildServiceRole --policy-name CodeBuildServiceRolePolicy --policy-document file://put-role-policy.json

2) Create an ECR repository. We are naming it elcarro:

$ aws ecr create-repository \
    --repository-name elcarro \
    --image-scanning-configuration scanOnPush=true \
    --region ${ZONE}

3) Create the build instructions file to create the container image and push it into the registry. We are also including in that directory the scripts used by those steps—Dockerfile and shell scripts called from there:

$ mkdir cloudbuild

$ cp v0.0.0-alpha/dbimage/* cloudbuild/

$ cd cloudbuild/

[cloudbuild]$ rm cloudbuild.yaml cloudbuild-18c-xe.yaml image_build.sh

[cloudbuild]$ vi buildspec.yml

[cloudbuild]$ cat buildspec.yml

version: 0.2

phases:
  pre_build:
    commands:
      - echo Logging in to Amazon ECR...
      - aws ecr get-login-password --region $AWS_DEFAULT_REGION | docker login --username AWS --password-stdin $AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com
  build:
    commands:
      - echo Build started on `date`
      - echo Building the Docker image...
      - docker build --no-cache --build-arg=DB_VERSION=18c --build-arg=CDB_NAME=GCLOUD --build-arg=CHARACTER_SET=$_CHARACTER_SET --build-arg=EDITION=xe -t $IMAGE_REPO_NAME:$IMAGE_TAG .
      - docker tag $IMAGE_REPO_NAME:$IMAGE_TAG $AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com/$IMAGE_REPO_NAME:$IMAGE_TAG
  post_build:
    commands:
      - echo Build completed on `date`
      - echo Pushing the Docker image...
      - docker push $AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com/$IMAGE_REPO_NAME:$IMAGE_TAG

4) Prepare a zip with all those files:

[cloudbuild]$ zip ../elCarroImage.zip *

5) Create an S3 bucket and copy the zip file:

$ aws s3 mb s3://codebuild-${ZONE}-${ACC_ID}-input-bucket --region $ZONE

$ aws s3 --region $ZONE cp elCarroImage.zip s3://codebuild-${ZONE}-${ACC_ID}-input-bucket --acl public-read

upload: ./elCarroImage.zip to s3://codebuild-<ZONE>-<ACC_ID>-input-bucket/elCarroImage.zip

6) Prepare a build JSON file for this project – replace the strings <$ZONE> and <$ACC_ID> with their actual values, as this is a static file:

$ cat elcarro-ECR.json

{
  "name": "elCarro",
  "source": {
    "type": "S3",
    "location": "codebuild-<$ZONE>-<$ACC_ID>-input-bucket/elCarroImage.zip"
  },
  "artifacts": {
    "type": "NO_ARTIFACTS"
  },
  "environment": {
    "type": "LINUX_CONTAINER",
    "image": "aws/codebuild/standard:4.0",
    "computeType": "BUILD_GENERAL1_SMALL",
    "environmentVariables": [
      {
        "name": "AWS_DEFAULT_REGION",
        "value": "<$ZONE>"
      },
      {
        "name": "AWS_ACCOUNT_ID",
        "value": "<$ACC_ID>"
      },
      {
        "name": "IMAGE_REPO_NAME",
        "value": "elcarro"
      },
      {
        "name": "IMAGE_TAG",
        "value": "latest"
      }
    ],
    "privilegedMode": true
  },
  "serviceRole": "arn:aws:iam::<$ACC_ID>:role/CodeBuildServiceRole"
}
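Rather than replacing the placeholders by hand, the file could be rendered from a template. The sketch below is one possible way to do it, not the method used in this walkthrough: it uses a cut-down template instead of the full project file, the file names are hypothetical, and the sample values are only defaults for when ZONE and ACC_ID are not already set.

```shell
# Sample defaults so the sketch runs on its own; in the walkthrough these
# values come from the environment prepared earlier.
ZONE="${ZONE:-us-east-2}"
ACC_ID="${ACC_ID:-123456789012}"

workdir=$(mktemp -d)

# Cut-down template using the same <$ZONE> and <$ACC_ID> placeholders.
cat > "$workdir/elcarro-ECR.json.tpl" <<'EOF'
{
  "location": "codebuild-<$ZONE>-<$ACC_ID>-input-bucket/elCarroImage.zip",
  "serviceRole": "arn:aws:iam::<$ACC_ID>:role/CodeBuildServiceRole"
}
EOF

# Replace each placeholder with its value; \$ matches a literal dollar sign.
sed -e 's|<\$ZONE>|'"$ZONE"'|g' \
    -e 's|<\$ACC_ID>|'"$ACC_ID"'|g' \
    "$workdir/elcarro-ECR.json.tpl" > "$workdir/elcarro-ECR.json"

cat "$workdir/elcarro-ECR.json"
```

Keeping the template next to the buildspec makes it simple to regenerate the project file for another account or region.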

7) Create the build project:

$ aws codebuild create-project --cli-input-json file://elcarro-ECR.json

8) Finally, start the build:

$ aws codebuild start-build --project-name elCarro

To check progress from the CLI:

$ aws codebuild list-builds

{

"ids": [

"elCarro:59ef7722-06d6-4a1d-b2d5-14d967e9e4df"

]

}

We can see build details using the latest ID from the above output:

$ aws codebuild batch-get-builds --ids <elCarro:59ef7722-06d6-4a1d-b2d5-14d967e9e4df>
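Checking the build by hand gets tedious over a long run, so the status check could be wrapped in a small polling loop. This is a sketch under assumptions: the function names are ours, the 30-second interval is arbitrary, and the loop relies on the buildStatus field returned by batch-get-builds, which reads IN_PROGRESS while the build is running.

```shell
# Returns 0 once a CodeBuild buildStatus value means the build is over
# (SUCCEEDED, FAILED, FAULT, STOPPED or TIMED_OUT), 1 while IN_PROGRESS.
build_finished() {
  case "$1" in
    IN_PROGRESS) return 1 ;;
    *) return 0 ;;
  esac
}

# Poll a build until it finishes; succeeds only if the build SUCCEEDED.
# Defined here but not invoked, since it needs AWS credentials.
wait_for_build() {
  build_id="$1"
  while true; do
    status=$(aws codebuild batch-get-builds --ids "$build_id" \
      --query 'builds[0].buildStatus' --output text)
    echo "$(date +%T) $status"
    if build_finished "$status"; then
      break
    fi
    sleep 30
  done
  [ "$status" = "SUCCEEDED" ]
}

# Usage (with the ID printed by list-builds):
#   wait_for_build "elCarro:59ef7722-06d6-4a1d-b2d5-14d967e9e4df"
```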

The build takes around 20 minutes. Once completed successfully, we can see the image in our ECR repo:

$ aws ecr list-images --repository-name elcarro

{

"imageIds": [

{

"imageTag": "latest",

"imageDigest": "sha256:7a2bd504abdf7c959332601b1deef98dda19418a252b510b604779a6143ec809"

}

]

}

Create a Kubernetes cluster

We need to install the CLI utils first:

$ curl -o kubectl https://amazon-eks.s3.us-west-2.amazonaws.com/1.20.4/2021-04-12/bin/linux/amd64/kubectl
$ chmod +x ./kubectl
$ mkdir -p $HOME/bin && cp ./kubectl $HOME/bin/kubectl && export PATH=$PATH:$HOME/bin
$ echo 'export PATH=$PATH:$HOME/bin' >> ~/.bashrc
$ kubectl version --short --client
Client Version: v1.20.4-eks-6b7464
$ curl --silent --location "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp
$ sudo mv /tmp/eksctl /usr/local/bin
$ eksctl version
0.55.0

In addition to ACC_ID and ZONE, which were set earlier, we define bash variables for the other customized values used frequently in the upcoming steps:

$ export PATH_TO_EL_CARRO_AWS=/home/ec2-user/elcarro-aws
$ export PATH_TO_EL_CARRO_GCP=/home/ec2-user/v0.0.0-alpha
$ export DBNAME=GCLOUD
$ export CRD_NS=db
$ export CLUSTER_NAME=gkecluster

We need to create a new ssh key for this test: myekskeys. This will be stored in the account, so there’s no need to repeat it if you deploy a second test in the future:

$ aws ec2 create-key-pair --region ${ZONE} --key-name myekskeys

Next, we’ll create a local file with the content of the private key, using output from the previous command:

$ vi myekskeys.priv

We are ready to create the cluster. It will take about 25 minutes to complete:

$ eksctl create cluster \
    --name $CLUSTER_NAME \
    --region $ZONE \
    --with-oidc \
    --ssh-access \
    --ssh-public-key myekskeys \
    --managed

These are basic checks to monitor the progress and resources as they are created:

$ aws eks list-clusters
$ aws eks describe-cluster --name $CLUSTER_NAME
$ eksctl get addons --cluster $CLUSTER_NAME
$ kubectl get nodes --show-labels --all-namespaces
$ kubectl get pods -o wide --all-namespaces --show-labels
$ kubectl get svc --all-namespaces
$ kubectl describe pod --all-namespaces
$ kubectl get events --sort-by=.metadata.creationTimestamp

Add the EBS CSI driver

We need to use the AWS-EBS CSI driver:

$ mkdir -p ${PATH_TO_EL_CARRO_AWS} && cd ${PATH_TO_EL_CARRO_AWS}

$ git clone https://github.com/kubernetes-sigs/aws-ebs-csi-driver.git

The configuration files in the El Carro project are set to use GCP storage, so we need to adjust a few of them to use the AWS EBS driver. We will create new configuration files in the directory elcarro-aws/deploy/csi based on the original ones:

$ mkdir -p deploy/csi

$ cp ${PATH_TO_EL_CARRO_GCP}/deploy/csi/gce_pd_storage_class.yaml deploy/csi/aws_pd_storage_class.yaml

$ cp ${PATH_TO_EL_CARRO_GCP}/deploy/csi/gce_pd_volume_snapshot_class.yaml deploy/csi/aws_pd_volume_snapshot_class.yaml

$ vi deploy/csi/aws_pd_storage_class.yaml

$ cat deploy/csi/aws_pd_storage_class.yaml

# Copyright 2021 Google LLC
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-gce-pd
# provisioner: pd.csi.storage.gke.io
provisioner: ebs.csi.aws.com
parameters:
  type: pd-standard
volumeBindingMode: WaitForFirstConsumer

$ vi deploy/csi/aws_pd_volume_snapshot_class.yaml

$ cat deploy/csi/aws_pd_volume_snapshot_class.yaml

# Copyright 2021 Google LLC
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotClass
metadata:
  name: csi-gce-pd-snapshot-class
# driver: pd.csi.storage.gke.io
driver: ebs.csi.aws.com
deletionPolicy: Delete
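The only functional change in both files is the driver name, so the edit could also be scripted instead of done in vi. The sketch below shows the idea on a stand-in copy of the storage class file (license header omitted, indentation assumed); unlike the manual edit above, it replaces the GCP line rather than commenting it out.

```shell
workdir=$(mktemp -d)

# Stand-in for the original gce_pd_storage_class.yaml.
cat > "$workdir/gce_pd_storage_class.yaml" <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-gce-pd
provisioner: pd.csi.storage.gke.io
parameters:
  type: pd-standard
volumeBindingMode: WaitForFirstConsumer
EOF

# Swap the GCP driver name for the AWS one; everything else is unchanged.
sed 's/pd\.csi\.storage\.gke\.io/ebs.csi.aws.com/' \
    "$workdir/gce_pd_storage_class.yaml" > "$workdir/aws_pd_storage_class.yaml"

grep provisioner "$workdir/aws_pd_storage_class.yaml"
```

The same substitution applies to the driver line of the volume snapshot class.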

The below policy is required only one time in your account, so there’s no need to re-execute this if you’re repeating cluster creation:

$ export OIDC_ID=$(aws eks describe-cluster --name $CLUSTER_NAME --query "cluster.identity.oidc.issuer" --output text | rev | cut -d"/" -f1 | rev)

$ aws iam list-open-id-connect-providers | grep $OIDC_ID

$ aws iam create-policy \
    --policy-name AmazonEKS_EBS_CSI_Driver_Policy \
    --policy-document file://${PATH_TO_EL_CARRO_AWS}/aws-ebs-csi-driver/docs/example-iam-policy.json
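The OIDC_ID pipeline above extracts the last path segment of the issuer URL by reversing the string, cutting the first field, and reversing it back. A shell parameter expansion gives the same result without extra processes. The issuer URL below is a made-up example in the general shape EKS returns, used only to compare the two approaches.

```shell
# Hypothetical issuer URL; the ID at the end is invented.
issuer="https://oidc.eks.us-east-2.amazonaws.com/id/EXAMPLED539D4633E53DE1B716D3041E"

# Approach used above: reverse, take the first "/" field, reverse back.
id_via_rev=$(printf '%s\n' "$issuer" | rev | cut -d"/" -f1 | rev)

# Equivalent parameter expansion: strip the longest prefix ending in "/".
id_via_expansion="${issuer##*/}"

echo "$id_via_rev"
echo "$id_via_expansion"
```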

The following steps are mandatory every time you need to configure EBS CSI:

$ eksctl create iamserviceaccount \
    --name ebs-csi-controller-sa \
    --namespace kube-system \
    --cluster $CLUSTER_NAME \
    --attach-policy-arn arn:aws:iam::${ACC_ID}:policy/AmazonEKS_EBS_CSI_Driver_Policy \
    --approve \
    --override-existing-serviceaccounts

$ export ROLE_ARN=$(aws cloudformation describe-stacks --stack-name eksctl-${CLUSTER_NAME}-addon-iamserviceaccount-kube-system-ebs-csi-controller-sa --query='Stacks[].Outputs[?OutputKey==`Role1`].OutputValue' --output text)

$ echo $ROLE_ARN

$ kubectl apply -k "github.com/kubernetes-sigs/aws-ebs-csi-driver/deploy/kubernetes/overlays/stable/?ref=master"

This is in AWS docs but seems to not be needed. It may have been necessary for an older release, so we’re keeping it just to be consistent with official docs:

$ kubectl annotate serviceaccount ebs-csi-controller-sa \
    -n kube-system \
    eks.amazonaws.com/role-arn=$ROLE_ARN

We are ready to add our storage class into the cluster:

$ kubectl create -f ${PATH_TO_EL_CARRO_AWS}/deploy/csi/aws_pd_storage_class.yaml

Next we complete the storage configuration by installing the volume snapshot CRDs and the snapshot controller:

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshotclasses.yaml
$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshotcontents.yaml
$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshots.yaml
$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/deploy/kubernetes/snapshot-controller/rbac-snapshot-controller.yaml
$ kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/deploy/kubernetes/snapshot-controller/setup-snapshot-controller.yaml

And now we add the volume snapshot class into the cluster:

$ kubectl create -f ${PATH_TO_EL_CARRO_AWS}/deploy/csi/aws_pd_volume_snapshot_class.yaml

At this point, the cluster is ready to deploy the operator.

A few basic sanity checks of our k8s cluster can be run now, so we have a baseline to compare against if any problem appears later:

$ kubectl get storageclass --all-namespaces
$ kubectl describe storageclass
$ kubectl get pods -o wide --all-namespaces
$ kubectl get events --sort-by=.metadata.creationTimestamp

Deploy El Carro operator and create the DB

We will use the code provided by the GCP project:

$ kubectl apply -f ${PATH_TO_EL_CARRO_GCP}/operator.yaml

Now we are ready to create an Oracle 18c XE database.

We took the original YAML file for this and updated it to use the proper URL for the DB image—the one we created a few steps ago. There’s no need to adjust the storageClass name, as we deployed to the cluster with the same name used by the GCP example but pointing to the correct AWS driver:

$ vi ${PATH_TO_EL_CARRO_AWS}/samples/v1alpha1_instance_18c_XE_express.yaml

$ cat ${PATH_TO_EL_CARRO_AWS}/samples/v1alpha1_instance_18c_XE_express.yaml

apiVersion: oracle.db.anthosapis.com/v1alpha1
kind: Instance
metadata:
  name: mydb
spec:
  type: Oracle
  version: "18c"
  edition: Express
  dbDomain: "gke"
  disks:
  - name: DataDisk
    storageClass: csi-gce-pd
  - name: LogDisk
    storageClass: csi-gce-pd
  services:
    Backup: true
    Monitoring: false
    Logging: false
    HA/DR: false
  images:
    # Replace below with the actual URIs hosting the service agent images.
    # service: "gcr.io/${PROJECT_ID}/oracle-database-images/oracle-18c-xe-seeded-${DB}"
    service: "<${ACC_ID}>.dkr.ecr.us-east-2.amazonaws.com/elcarro:latest"
  sourceCidrRanges: [0.0.0.0/0]
  # Oracle SID character limit is 8, anything > gets truncated by Oracle
  cdbName: GCLOUD
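The service image value is the ECR URI of the image built earlier, and it has to be written out in full because kubectl does not expand shell variables inside the YAML file. A small sketch of how that URI is composed follows; the helper name is ours, and the account ID in the example is a placeholder.

```shell
# Hypothetical helper: compose an ECR image URI from its parts.
ecr_image_uri() {
  # $1 = account ID, $2 = region, $3 = repository, $4 = tag
  printf '%s.dkr.ecr.%s.amazonaws.com/%s:%s\n' "$1" "$2" "$3" "$4"
}

ecr_image_uri 123456789012 us-east-2 elcarro latest
# prints 123456789012.dkr.ecr.us-east-2.amazonaws.com/elcarro:latest
```

With the variables from the preparation steps, the call would be ecr_image_uri "$ACC_ID" "$ZONE" elcarro latest.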

Now we continue with the steps described in the GCP guide to deploy the 18c XE database:

$ export CRD_NS=db

$ kubectl create ns ${CRD_NS}

$ kubectl get ns ${CRD_NS}

$ kubectl apply -f ${PATH_TO_EL_CARRO_AWS}/samples/v1alpha1_instance_18c_XE_express.yaml -n ${CRD_NS}

To monitor the progress of the deployment, we can check every component. The most relevant information is on the mydb-sts-0 pod:

$ kubectl get instances -n $CRD_NS
$ kubectl get events --sort-by=.metadata.creationTimestamp
$ kubectl get nodes
$ kubectl get pods -n $CRD_NS
$ kubectl get pvc -n $CRD_NS
$ kubectl get svc -n $CRD_NS
$ kubectl logs mydb-agent-deployment-<id> -c config-agent -n $CRD_NS
$ kubectl logs mydb-agent-deployment-<id> -c oracle-monitoring -n $CRD_NS
$ kubectl logs mydb-sts-0 -c oracledb -n $CRD_NS
$ kubectl logs mydb-sts-0 -c dbinit -n $CRD_NS
$ kubectl logs mydb-sts-0 -c dbdaemon -n $CRD_NS
$ kubectl logs mydb-sts-0 -c alert-log-sidecar -n $CRD_NS
$ kubectl logs mydb-sts-0 -c listener-log-sidecar -n $CRD_NS
$ kubectl describe pods mydb-sts-0 -n $CRD_NS
$ kubectl describe pods mydb-agent-deployment-<id> -n $CRD_NS
$ kubectl describe node ip-<our-ip>.compute.internal

After a few minutes, the database instance (CDB) is created:

$ kubectl get pods -n $CRD_NS
NAME                                     READY   STATUS    RESTARTS   AGE
mydb-agent-deployment-6f7748f88b-2wlp9   1/1     Running   0          11m
mydb-sts-0                               4/4     Running   0          11m

$ kubectl get instances -n $CRD_NS
NAME   DB ENGINE   VERSION   EDITION   ENDPOINT      URL                                                                            DB NAMES   BACKUP ID   READYSTATUS   READYREASON      DBREADYSTATUS   DBREADYREASON
mydb   Oracle      18c       Express   mydb-svc.db   af8b37a22dc304391b51335848c9f7ff-678637856.us-east-2.elb.amazonaws.com:6021                           True          CreateComplete   True            CreateComplete
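Instead of re-running the status command by hand, the wait could be scripted with a generic polling helper. This is a minimal sketch: poll_until is a hypothetical name, and the jsonpath in the usage comment assumes the Instance resource exposes a condition of type Ready, which is consistent with the READYSTATUS column above but is not confirmed here.

```shell
# poll_until EXPECTED ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until its output equals EXPECTED or ATTEMPTS is exhausted.
poll_until() {
  expected="$1"; attempts="$2"; delay="$3"
  shift 3
  i=1
  while [ "$i" -le "$attempts" ]; do
    out=$("$@" 2>/dev/null) || out=""
    if [ "$out" = "$expected" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Stand-in command so the sketch runs without a cluster.
poll_until True 3 0 echo True && echo "instance ready"

# Against a real cluster (assumed condition type, see the note above):
#   poll_until True 60 15 kubectl get instances mydb -n "$CRD_NS" \
#     -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}'
```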

The last step is to deploy the database (PDB):

$ kubectl apply -f ${PATH_TO_EL_CARRO_GCP}/samples/v1alpha1_database_pdb1_express.yaml -n ${CRD_NS}

database.oracle.db.anthosapis.com/pdb1 created

After this completes, we can see instance status now includes the PDB:

$ kubectl get instances -n $CRD_NS
NAME   DB ENGINE   VERSION   EDITION   ENDPOINT      URL                                                                            DB NAMES   BACKUP ID   READYSTATUS   READYREASON      DBREADYSTATUS   DBREADYREASON
mydb   Oracle      18c       Express   mydb-svc.db   af8b37a22dc304391b51335848c9f7ff-678637856.us-east-2.elb.amazonaws.com:6021   ["pdb1"]               True          CreateComplete   True            CreateComplete

We can connect to the database by either the TNS entry (af8b37a22dc304391b51335848c9f7ff-678637856.us-east-2.elb.amazonaws.com:6021/pdb1.gke) or directly to the container:

$ kubectl exec -it -n db mydb-sts-0 -c oracledb -- bash -i

$ . oraenv <<<GCLOUD

bash-4.2$ sqlplus / as sysdba

SQL*Plus: Release 18.0.0.0.0 - Production on Wed Jul 7 21:03:28 2021

Version 18.4.0.0.0

Copyright (c) 1982, 2018, Oracle. All rights reserved.

Connected to:

Oracle Database 18c Express Edition Release 18.0.0.0.0 - Production

Version 18.4.0.0.0

SQL> show pdbs

    CON_ID CON_NAME                       OPEN MODE  RESTRICTED
---------- ------------------------------ ---------- ----------
         2 PDB$SEED                       READ ONLY  NO
         3 PDB1                           READ WRITE NO

SQL> set lines 180 pages 180

SQL> select * from v$session_connect_info;

...

12 rows selected.

SQL> exit

Disconnected from Oracle Database 18c Express Edition Release 18.0.0.0.0 - Production

Version 18.4.0.0.0

bash-4.2$
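For the other option, connecting through the load balancer, the connect string shown earlier is the endpoint host and port followed by the PDB name qualified with the dbDomain. A small sketch of that composition, with a helper name of our own choosing:

```shell
# Hypothetical helper: build "host:port/pdb.domain" from its parts.
connect_string() {
  # $1 = endpoint host, $2 = port, $3 = PDB name, $4 = dbDomain
  printf '%s:%s/%s.%s\n' "$1" "$2" "$3" "$4"
}

connect_string af8b37a22dc304391b51335848c9f7ff-678637856.us-east-2.elb.amazonaws.com 6021 pdb1 gke
```

The host and port come from the URL column of kubectl get instances, and the domain from the dbDomain field of the Instance YAML.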

Next steps

This setup is just the first step to provision an Oracle database into a k8s cluster on AWS EKS.

It is ready to use the features implemented by the operator, such as backups using snapshots or RMAN, restoring from a backup, and exporting and importing data using Data Pump.

We will explore that outside of GCP in upcoming blog posts.

Deleting resources after testing

After our tests are finished, we should delete the cluster to avoid charges if we don’t plan to continue using it:

$ kubectl get svc --all-namespaces

$ kubectl delete svc mydb-svc -n ${CRD_NS}

$ eksctl delete cluster --name $CLUSTER_NAME

Even if the last command reports that it completed successfully, verify this by inspecting the detailed output of the command below. A couple of times we found the message “The following resource(s) failed to delete: [InternetGateway, SubnetPublicUSEAST2A, VPC, VPCGatewayAttachment, SubnetPublicUSEAST2B].” and had to delete those resources manually using the AWS console:

$ eksctl utils describe-stacks --region=us-east-2 --cluster=$CLUSTER_NAME
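Leftover stacks can also be looked up through CloudFormation directly. The sketch below only shows the filtering step on made-up stack names; the helper name is ours, and it assumes eksctl names its stacks with the prefix eksctl-<cluster>-, as seen in the iamserviceaccount stack name used earlier.

```shell
# Hypothetical helper: keep only the stack names eksctl created for a cluster.
# Reads one stack name per line on stdin.
cluster_stacks() {
  grep "^eksctl-$1-" || true
}

# Example with invented names; in practice the input could come from
#   aws cloudformation list-stacks --stack-status-filter DELETE_FAILED \
#     --query 'StackSummaries[].StackName' --output text | tr '\t' '\n'
printf '%s\n' \
  eksctl-gkecluster-cluster \
  eksctl-gkecluster-nodegroup-ng-1 \
  eksctl-other-cluster |
  cluster_stacks gkecluster
```

Any stack listed with a DELETE_FAILED status points at resources that still need manual cleanup.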

Conclusion

Since El Carro is based on open standards and technologies (such as Kubernetes), porting it to another cloud provider such as AWS isn’t that difficult. Though some minor changes are required, overall it’s not much of a departure from the GCP process. Maybe additional curated quickstart guides for other cloud providers will be created based on experiences like this, which could ease its adoption outside of GCP.

Share this

Share this

More resources

Learn more about Pythian by reading the following blogs and articles.

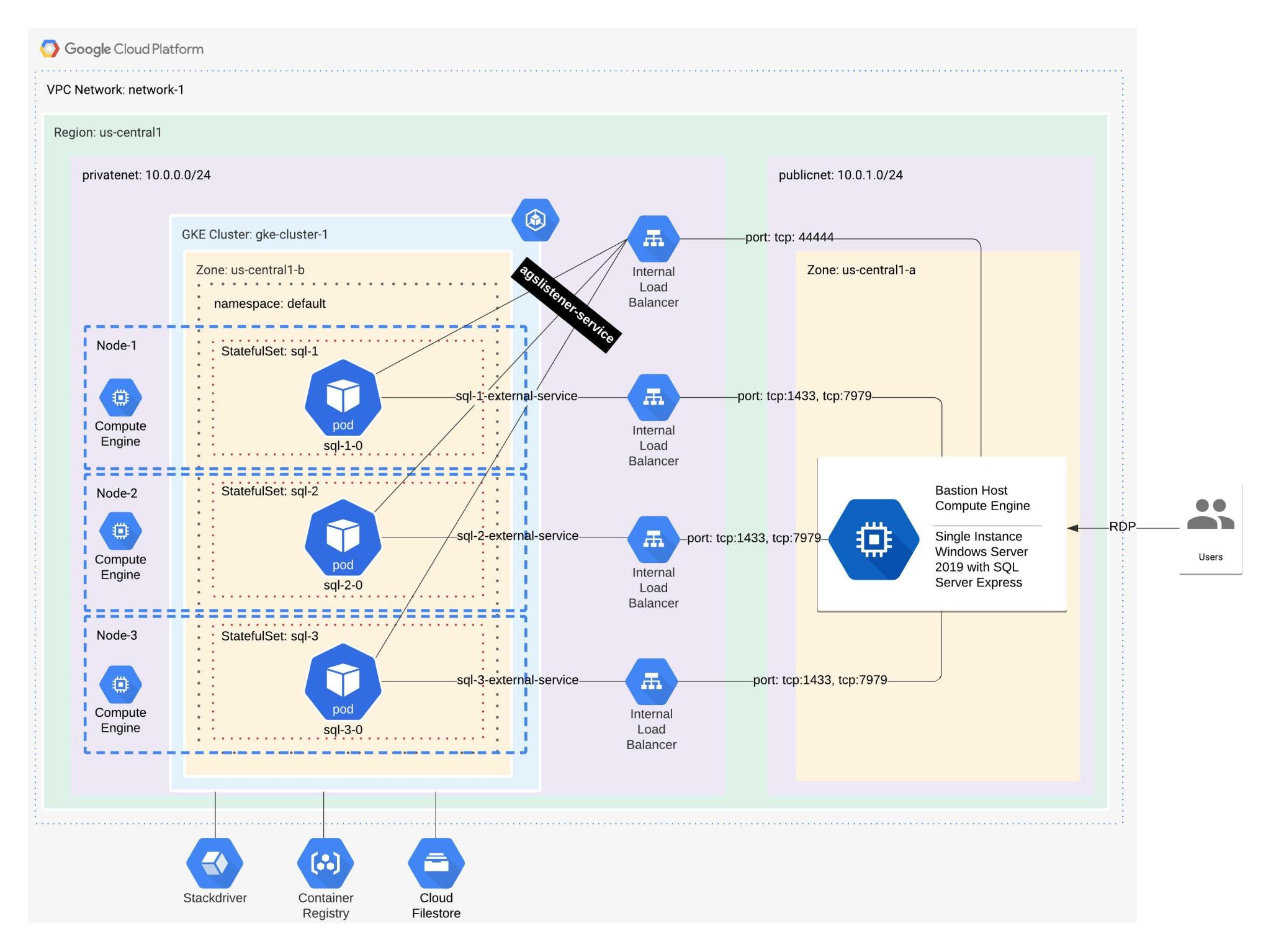

Running SQL Server 2019 on Google Kubernetes Engine

SQL Server AlwaysOn Availability Groups on Google Kubernetes Engine

How to delete an RAC Database Using DBCA silent mode

Ready to unlock value from your data?

With Pythian, you can accomplish your data transformation goals and more.