Listener over InfiniBand on Exadata (part 1)

Background

First of all, it is important to understand that InfiniBand (IB) is a computer-networking communications standard used in high-performance computing that features very high throughput and very low latency. It is used for data interconnect both among and within computers. InfiniBand is also used as either a direct or switched interconnect between servers and storage systems, as well as an interconnect between storage systems (Wikipedia).

Furthermore, we are going to enable the Sockets Direct Protocol (SDP) on the servers. SDP is a standard communication protocol for clustered server environments, providing an interface between the network interface card and the application. By using SDP, applications place most of the messaging burden on the network interface card, which frees the CPU for other tasks. As a result, SDP decreases network latency and CPU utilization, and thereby improves performance (Oracle Docs).

Imagine you have an Exadata full rack. This means you have 14 storage servers (aka storage cells or cells), eight compute nodes (aka database servers or database nodes) and two (or three) InfiniBand switches. Or, even better, you might have an Exadata with an elastic configuration, in which case you could have 18 compute nodes and only three storage servers, because you need more compute power than storage capacity. The procedure and the case presented here can also be applied to SuperCluster, Exalytics, BDA or any other Oracle Engineered System (except ODA) you might have in your infrastructure.

Contextualization

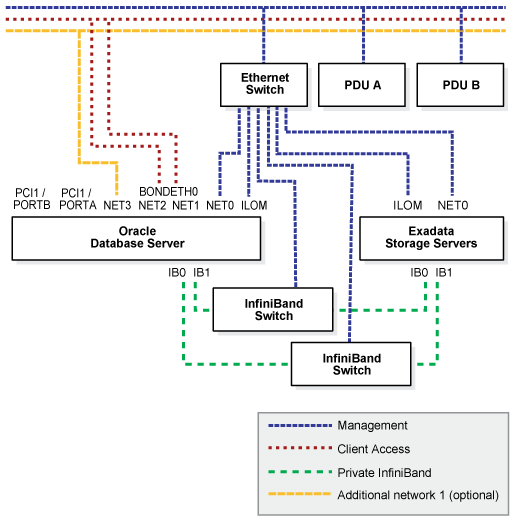

Now that we have some background, let's give you some context. You have an application running on one of your compute nodes, let's say exadb01, that consumes a lot of data from a database running on exadb02 and exadb04. This application could be, for example, a GoldenGate (GG) instance in a downstream architecture.

When a database accesses data stored on the storage servers, it uses the IB network. However, when another application, such as a downstream GoldenGate instance, receives data from the database, the data is transferred over the public (10Gbps) network. Depending on the volume of data the downstream GG instance receives, this can put a heavy load on that network.

There is a way to configure the infrastructure so that the data transfer from the database to the downstream GG instance goes over the IB network. Please keep in mind that this works here only because the database and the GG instance are running on the same Exadata system. GG receives data from the database to replicate it somewhere else. The database sends the data to the downstream GG instance using log shipping, meaning one of the LOG_ARCHIVE_DEST_n parameters is configured to ship logs to the downstream GG instance.

The database in this case generates ~21TB of redo per day (~900GB per hour), which is a huge amount of data to transfer over a 10Gbps network. 900GB/h is 2Gbps, or 20% of the bandwidth. This may not seem like a lot, but this network is shared with other databases and client applications. On the IB network, the same traffic would consume only 5% of the bandwidth if the IB ports are bonded for failover, or 2.5% if they are bonded for throughput.

To understand the solution, it helps to look at how the networks are set up in Exadata. The solution proposed was to offload the data traffic from the Client Access network to the Private InfiniBand network. Since database servers also talk to each other over the Private InfiniBand network, we can use it in some cases and leverage it when it makes sense. You do not want to do this if your environment is starving for I/O and is already consuming almost all the IB network bandwidth. In short, configuring log shipping through the IB network lets the client network breathe again.
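The bandwidth percentages above follow from a short unit conversion. The worked arithmetic below assumes decimal units (1TB = 1000GB, 1 byte = 8 bits) and 40Gbps QDR InfiniBand ports, which is the link speed the 5% figure implies; with a failover bond only one port carries traffic, while a throughput bond can use both.

$$
\begin{aligned}
21\ \text{TB/day} \div 24\ \text{h/day} &= 875\ \text{GB/h} \approx 900\ \text{GB/h} \\
\frac{900\ \text{GB/h} \times 8\ \text{bit/B}}{3600\ \text{s/h}} &= 2\ \text{Gb/s} \\
\text{10 Gb/s client network: } \frac{2}{10} &= 20\% \\
\text{40 Gb/s IB, one active port (failover): } \frac{2}{40} &= 5\% \\
\text{80 Gb/s IB, two active ports (throughput): } \frac{2}{80} &= 2.5\%
\end{aligned}
$$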

Implementation

Let's take a look at how to configure the listener over InfiniBand. I am going to show you some steps here, and they can also be used to configure database links (DBLinks) over IB.

Check the current configuration on all your compute nodes:

```
[root@exadb01 ~]# dcli -l root -g ~/dbs_group grep -i sdp /etc/rdma/rdma.conf
exadb01: # Load SDP module
exadb01: SDP_LOAD=no
exadb02: # Load SDP module
exadb02: SDP_LOAD=no
exadb03: # Load SDP module
exadb03: SDP_LOAD=no
exadb04: # Load SDP module
exadb04: SDP_LOAD=no
exadb05: # Load SDP module
exadb05: SDP_LOAD=no
exadb06: # Load SDP module
exadb06: SDP_LOAD=no
exadb07: # Load SDP module
exadb07: SDP_LOAD=no
exadb08: # Load SDP module
exadb08: SDP_LOAD=no
```

Change SDP_LOAD to yes:

```
[root@exadb01 ~]# dcli -g ~/dbs_group -l root sed -i -e 's/SDP_LOAD=no/SDP_LOAD=yes/g' /etc/rdma/rdma.conf
```

Confirm the parameter was changed on all compute nodes:

```
[root@exadb01 ~]# dcli -l root -g ~/dbs_group grep -i sdp /etc/rdma/rdma.conf
exadb01: # Load SDP module
exadb01: SDP_LOAD=yes
exadb02: # Load SDP module
exadb02: SDP_LOAD=yes
exadb03: # Load SDP module
exadb03: SDP_LOAD=yes
exadb04: # Load SDP module
exadb04: SDP_LOAD=yes
exadb05: # Load SDP module
exadb05: SDP_LOAD=yes
exadb06: # Load SDP module
exadb06: SDP_LOAD=yes
exadb07: # Load SDP module
exadb07: SDP_LOAD=yes
exadb08: # Load SDP module
exadb08: SDP_LOAD=yes
```

Check whether the SDP module options are already set in /etc/modprobe.d/exadata.conf on all the nodes (in this example, there is no ib_sdp line yet):

```
[root@exadb01 ~]# dcli -l root -g ~/dbs_group grep -i options /etc/modprobe.d/exadata.conf
exadb01: options ipmi_si kcs_obf_timeout=6000000
exadb01: options ipmi_si kcs_ibf_timeout=6000000
exadb01: options ipmi_si kcs_err_retries=20
exadb01: options megaraid_sas resetwaittime=0
exadb01: options rds_rdma rds_ib_active_bonding_enabled=1
exadb02: options ipmi_si kcs_obf_timeout=6000000
exadb02: options ipmi_si kcs_ibf_timeout=6000000
exadb02: options ipmi_si kcs_err_retries=20
exadb02: options megaraid_sas resetwaittime=0
exadb02: options rds_rdma rds_ib_active_bonding_enabled=1
exadb03: options ipmi_si kcs_obf_timeout=6000000
exadb03: options ipmi_si kcs_ibf_timeout=6000000
exadb03: options ipmi_si kcs_err_retries=20
exadb03: options megaraid_sas resetwaittime=0
exadb03: options rds_rdma rds_ib_active_bonding_enabled=1
exadb04: options ipmi_si kcs_obf_timeout=6000000
exadb04: options ipmi_si kcs_ibf_timeout=6000000
exadb04: options ipmi_si kcs_err_retries=20
exadb04: options megaraid_sas resetwaittime=0
exadb04: options rds_rdma rds_ib_active_bonding_enabled=1
exadb05: options ipmi_si kcs_obf_timeout=6000000
exadb05: options ipmi_si kcs_ibf_timeout=6000000
exadb05: options ipmi_si kcs_err_retries=20
exadb05: options megaraid_sas resetwaittime=0
exadb05: options rds_rdma rds_ib_active_bonding_enabled=1
exadb06: options ipmi_si kcs_obf_timeout=6000000
exadb06: options ipmi_si kcs_ibf_timeout=6000000
exadb06: options ipmi_si kcs_err_retries=20
exadb06: options megaraid_sas resetwaittime=0
exadb06: options rds_rdma rds_ib_active_bonding_enabled=1
exadb07: options ipmi_si kcs_obf_timeout=6000000
exadb07: options ipmi_si kcs_ibf_timeout=6000000
exadb07: options ipmi_si kcs_err_retries=20
exadb07: options megaraid_sas resetwaittime=0
exadb07: options rds_rdma rds_ib_active_bonding_enabled=1
exadb08: options ipmi_si kcs_obf_timeout=6000000
exadb08: options ipmi_si kcs_ibf_timeout=6000000
exadb08: options ipmi_si kcs_err_retries=20
exadb08: options megaraid_sas resetwaittime=0
exadb08: options rds_rdma rds_ib_active_bonding_enabled=1
```

If the ib_sdp options line is not set yet, append it to the end of the file on all the nodes:

```
[root@exadb01 ~]# dcli -l root -g ~/dbs_group "echo \"options ib_sdp sdp_zcopy_thresh=0 sdp_apm_enable=0 recv_poll=0\" >> /etc/modprobe.d/exadata.conf"
```

Confirm the line was successfully added to the file on all the nodes:

```
[root@exadb01 ~]# dcli -g ~/dbs_group -l root grep -i ib_sdp /etc/modprobe.d/exadata.conf
exadb01: options ib_sdp sdp_zcopy_thresh=0 sdp_apm_enable=0 recv_poll=0
exadb02: options ib_sdp sdp_zcopy_thresh=0 sdp_apm_enable=0 recv_poll=0
exadb03: options ib_sdp sdp_zcopy_thresh=0 sdp_apm_enable=0 recv_poll=0
exadb04: options ib_sdp sdp_zcopy_thresh=0 sdp_apm_enable=0 recv_poll=0
exadb05: options ib_sdp sdp_zcopy_thresh=0 sdp_apm_enable=0 recv_poll=0
exadb06: options ib_sdp sdp_zcopy_thresh=0 sdp_apm_enable=0 recv_poll=0
exadb07: options ib_sdp sdp_zcopy_thresh=0 sdp_apm_enable=0 recv_poll=0
exadb08: options ib_sdp sdp_zcopy_thresh=0 sdp_apm_enable=0 recv_poll=0
```

Confirm that SDP is enabled (set to both) in /etc/libsdp.conf:

```
[root@exadb01 ~]# dcli -g ~/dbs_group -l root grep ^use /etc/libsdp.conf
exadb01: use both server * *:*
exadb01: use both client * *:*
exadb02: use both server * *:*
exadb02: use both client * *:*
exadb03: use both server * *:*
exadb03: use both client * *:*
exadb04: use both server * *:*
exadb04: use both client * *:*
exadb05: use both server * *:*
exadb05: use both client * *:*
exadb06: use both server * *:*
exadb06: use both client * *:*
exadb07: use both server * *:*
exadb07: use both client * *:*
exadb08: use both server * *:*
exadb08: use both client * *:*
```

Now reboot the nodes for the changes to take effect. Strictly speaking, you only need to reboot the nodes on which you want to use the feature.

In this first part, we discussed why we need a listener over IB, and we configured all the parameters needed at the OS level to get SDP (Sockets Direct Protocol) to work. The next part will show how to create the listener itself. I hope you enjoyed reading!
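As a complement to the step-by-step dcli commands above, the same per-node changes can be expressed as an idempotent script, which is handy if the procedure has to be re-run: the `echo ... >>` command appends a duplicate line every time it is executed. This is a minimal sketch, not part of the original procedure. The function names `enable_sdp` and `check_libsdp` and the variable `SDP_OPTS` are hypothetical, and it assumes bash and GNU `sed -i`, as found on the Oracle Linux compute nodes. The file paths default to the ones used in this post and can be overridden, for example to try the functions on copies of the files first.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper names): apply the SDP-related OS
# settings from this post to ONE compute node, idempotently.
# Default paths are the files edited above; pass others for testing.

SDP_OPTS="options ib_sdp sdp_zcopy_thresh=0 sdp_apm_enable=0 recv_poll=0"

# Flip SDP_LOAD=no to SDP_LOAD=yes and add the ib_sdp options line once.
enable_sdp() {
  local rdma_conf="${1:-/etc/rdma/rdma.conf}"
  local modprobe_conf="${2:-/etc/modprobe.d/exadata.conf}"

  # Same substitution as the sed command above, anchored to the start of
  # the line so commented-out lines (starting with "#") are left alone.
  sed -i -e 's/^SDP_LOAD=no/SDP_LOAD=yes/' "$rdma_conf" || return 1

  # Append the options line only if no ib_sdp options line exists yet,
  # so calling the function twice does not duplicate it.
  if ! grep -q '^options ib_sdp' "$modprobe_conf"; then
    echo "$SDP_OPTS" >> "$modprobe_conf" || return 1
  fi
}

# Return 0 only if libsdp.conf enables SDP ("use both") for both the
# server and the client rules, as in the grep output above.
check_libsdp() {
  local libsdp_conf="${1:-/etc/libsdp.conf}"
  grep -q '^use both server' "$libsdp_conf" &&
    grep -q '^use both client' "$libsdp_conf"
}
```

For example, after sourcing the file in a root shell on a compute node, `enable_sdp && check_libsdp && echo ok` applies the changes to the default paths and checks libsdp.conf. The reboot described above is still required afterwards.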

Share this

Share this

More resources

Learn more about Pythian by reading the following blogs and articles.

Monitoring apache Cassandra metrics with Graphite and Grafana

![]()

Monitoring apache Cassandra metrics with Graphite and Grafana

Jun 30, 2016 12:00:00 AM

8

min read

Monitoring your 5.7 InnoDB cluster status

![]()

Monitoring your 5.7 InnoDB cluster status

Feb 4, 2019 12:00:00 AM

4

min read

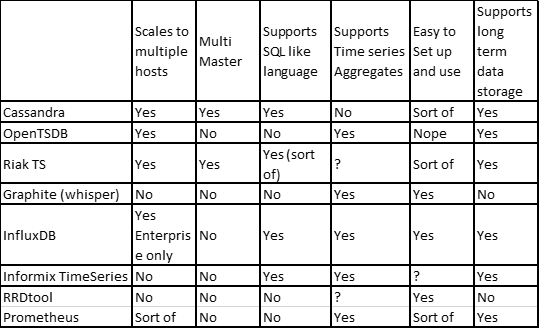

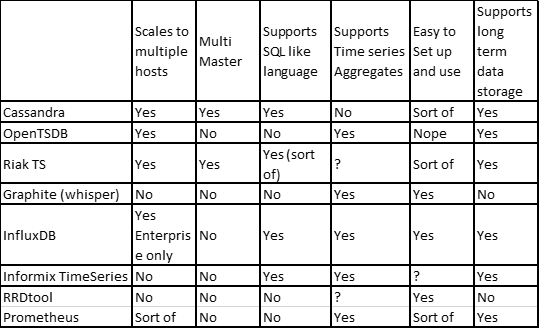

Cassandra as a time series database

Cassandra as a time series database

Mar 23, 2018 12:00:00 AM

6

min read

Ready to unlock value from your data?

With Pythian, you can accomplish your data transformation goals and more.